ID | Date | Author | Type | Category | Subject

48 | 28 Oct 2019 06:28 | Simone Stracka | Problem | Software | Need python3 package tkinter on DAQ pc

We need python3 package tkinter installed on ds-proto-daq in order to run the steering module GUI.

I don't have root privileges.
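Before (or after) an admin installs the package, you can quickly check whether the Python 3 interpreter on ds-proto-daq can load tkinter with the sketch below. `has_tkinter` is a hypothetical helper name. It only tests importability and does not install anything.

```python
import sys


def has_tkinter() -> bool:
    """Return True if this Python 3 interpreter can import tkinter."""
    try:
        # Importing tkinter also loads the compiled _tkinter extension,
        # so this fails if either part is missing.
        import tkinter  # noqa: F401
    except ImportError:
        return False
    return True


print(f"{sys.executable}: tkinter available = {has_tkinter()}")
```

Run it with the same `python3` that launches the steering module GUI, so the check applies to that interpreter.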


47 | 27 Oct 2019 06:08 | Simone Stracka | Configuration | Hardware | Converters installed in VME crate

People: Edgar, Simone

Installed differential-to-single-ended converters in the VME crate and turned the crate back on (elog:47/2).

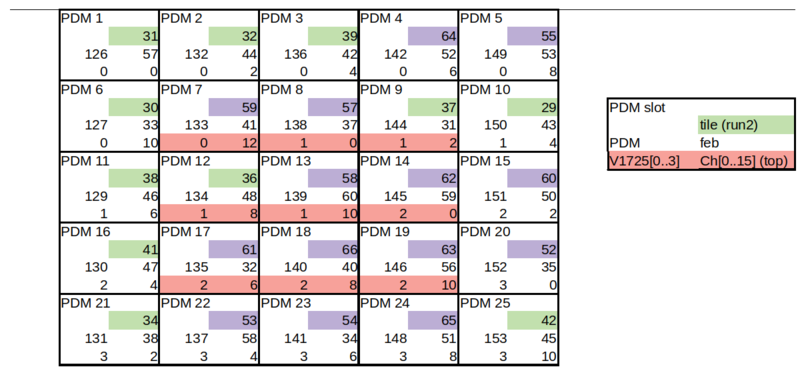

Converter channels are numbered 0 to 15, starting from the low end. They are wired as follows:

left board, channels 0..15 = PDM slot 1..16

right board, channels 0..8 = PDM slot 17..25
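The wiring above can be written as a small lookup, for example to cross-check channel maps in analysis code. This is a sketch: the function name `pdm_slot` and the board labels `"left"`/`"right"` are illustrative, and it encodes only the mapping stated in this entry.

```python
def pdm_slot(board: str, channel: int) -> int:
    """Return the PDM slot wired to a converter channel on the left or right board."""
    if board == "left" and 0 <= channel <= 15:
        return channel + 1      # left board: ch 0..15 -> PDM slots 1..16
    if board == "right" and 0 <= channel <= 8:
        return channel + 17     # right board: ch 0..8 -> PDM slots 17..25
    raise ValueError(f"no PDM wired to {board} board channel {channel}")


# Every PDM slot 1..25 should appear exactly once.
ALL_SLOTS = [pdm_slot("left", ch) for ch in range(16)] + [pdm_slot("right", ch) for ch in range(9)]
assert sorted(ALL_SLOTS) == list(range(1, 26))
```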

N.B. The logic level of V1725 board #0 is set to TTL (boards #1, #2, #3 are set to NIM); see elog:47/3.

In the ODB, under /Equipment/V1725_Data00/Settings, set Channel Mask to 0x1555 for all V1725 boards (this enables channels 0, 2, 4, 6, 8, 10, 12).

(Board 0 receives 7 PDM inputs; boards 1, 2, 3 receive 6 PDMs each.)

On https://ds-proto-daq.cern.ch/chronobox/, set Enable Channel [ch_enable] = 0x3F3F3F7F and Channel Assignment [ch_assign] = 0x00393340.

The 9 central PDMs are assigned to the "top" group and the external PDMs to the "bottom" group (elog:47/1).
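You can cross-check the three hex values above by listing their set bits. This is a minimal sketch that assumes bit n of each mask corresponds to channel/input n, lowest bit first. Two further readings are interpretations, not confirmed against the chronobox documentation: splitting `ch_enable` into one byte per V1725 board, and treating a set `ch_assign` bit as the "top" group. They do match the 7 + 6 + 6 + 6 = 25 PDM inputs and the 9 central PDMs quoted here.

```python
from typing import List

V1725_CHANNEL_MASK = 0x1555   # ODB Channel Mask, same for all V1725 boards
CH_ENABLE = 0x3F3F3F7F        # chronobox Enable Channel [ch_enable]
CH_ASSIGN = 0x00393340        # chronobox Channel Assignment [ch_assign]


def set_bits(mask: int) -> List[int]:
    """Positions of the set bits in mask, lowest first."""
    return [bit for bit in range(mask.bit_length()) if (mask >> bit) & 1]


def bits_per_byte(mask: int, nbytes: int = 4) -> List[int]:
    """Number of set bits in each byte of mask, least significant byte first."""
    return [bin((mask >> (8 * i)) & 0xFF).count("1") for i in range(nbytes)]


print("V1725 channels enabled:", set_bits(V1725_CHANNEL_MASK))
print("ch_enable bits per byte (one byte per board?):", bits_per_byte(CH_ENABLE))
print("ch_assign set bits:", set_bits(CH_ASSIGN), "count:", len(set_bits(CH_ASSIGN)))
# Sanity check: no input is assigned to a group without also being enabled.
print("every assigned input is enabled:", (CH_ASSIGN & ~CH_ENABLE) == 0)
```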

The wiki instructions, with the script for getting the CDM back into a sensible state, seem outdated. The variables look fine, though:

[dsproto@ds-proto-daq ~]$ esper-tool read 192.168.1.5 cdm ext_clk

[49999632]

[dsproto@ds-proto-daq ~]$ esper-tool read 192.168.1.5 lmk pll1_ld

[1]

[dsproto@ds-proto-daq ~]$ esper-tool read 192.168.1.5 lmk pll2_ld

[1]
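The three reads above can be scripted as a quick health check of the CDM clocking. This is a sketch only. It shells out to `esper-tool read` exactly as in the session above and assumes the output is a bracketed list like `[1]`. Treating `pll1_ld`/`pll2_ld` = 1 as "locked" is an assumption based on the readings shown, and the helper names are hypothetical.

```python
import subprocess
from typing import List

CDM_HOST = "192.168.1.5"  # address used in the session above


def parse_esper_output(text: str) -> List[int]:
    """Turn esper-tool output such as '[49999632]' into [49999632] (assumed format)."""
    body = text.strip().strip("[]")
    return [int(tok) for tok in body.replace(",", " ").split()]


def esper_read(host: str, module: str, variable: str) -> List[int]:
    """Run `esper-tool read <host> <module> <variable>` and parse the result."""
    out = subprocess.check_output(
        ["esper-tool", "read", host, module, variable], universal_newlines=True
    )
    return parse_esper_output(out)


def cdm_plls_locked(host: str = CDM_HOST) -> bool:
    """True if both LMK lock-detect flags read [1] (assumed to mean 'locked')."""
    return all(esper_read(host, "lmk", flag) == [1] for flag in ("pll1_ld", "pll2_ld"))
```

On ds-proto-daq, `esper_read(CDM_HOST, "cdm", "ext_clk")` would return the external-clock reading ([49999632] in the session above).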

Attachment 1: PDMadcCh.png

Attachment 2: converters.jpg

Attachment 3: adc0_ttl.jpg

46

|

26 Oct 2019 07:33 |

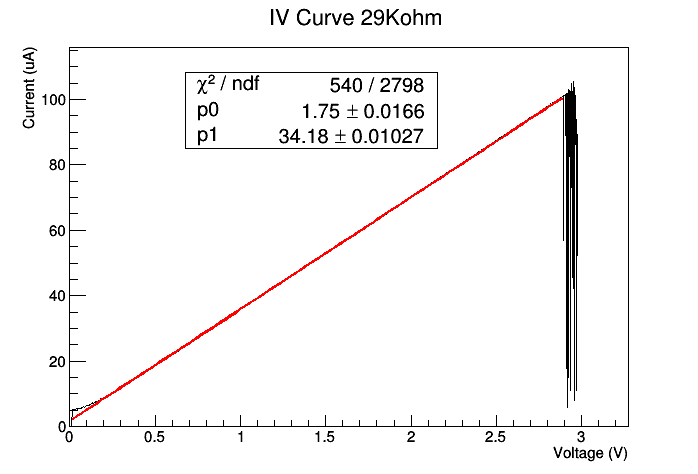

Sam Hill | Problem | Software | Test of IV Script |

People: Simone, Sam, Tom, Edgar

Tested the IV-curve Python script that runs through MIDAS. The measured resistance is consistent with the resistor specification (elog:46/1).

The resistor is attached to ch11, and we called the script as follows (channels go from 0 to 24):

chnum=11 ; python3 iv_curve.py --no_steering --caen_chan $chnum --stop_v 3 --step_v 0.001 --filename ivcurve_`date +%s`_$chnum.txt

Issues:

The trip condition is not read out, so the Python script overrides a power-supply trip and attempts to bring the voltage back up to the next value. This is potentially dangerous. The script only terminates once it reaches the final voltage value. See the attached plot (elog:46/1).

The script terminated with this error message:

Traceback (most recent call last):

File "iv_curve.py", line 287, in <module>

iv.run()

File "iv_curve.py", line 269, in run

self.set_caen_and_wait_for_readback(voltage)

File "iv_curve.py", line 223, in set_caen_and_wait_for_readback

raise RuntimeError("CAEN HV didn't respond to request to set voltage to %s; latest readback voltage is %s" % (voltage, rdb))

RuntimeError: CAEN HV didn't respond to request to set voltage to 2.976; latest readback voltage is 0.052000001072883606
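One way to address the trip issue is to poll the trip status and the readback after each setpoint, and to abort the scan instead of moving on to the next voltage. The sketch below is not the actual iv_curve.py. `safe_ramp` and the `set_voltage`, `read_voltage`, `read_current` and `is_tripped` callables are hypothetical stand-ins for whatever MIDAS/CAEN access the script uses. The tolerance and polling values are placeholders.

```python
import time
from typing import Callable, List, Sequence, Tuple


def safe_ramp(
    voltages: Sequence[float],
    set_voltage: Callable[[float], None],
    read_voltage: Callable[[], float],
    read_current: Callable[[], float],
    is_tripped: Callable[[], bool],
    tolerance_v: float = 0.01,
    poll_interval_s: float = 0.0,
    max_polls: int = 50,
) -> List[Tuple[float, float, float]]:
    """Step through the setpoints, returning (setpoint, readback V, current) per point.

    Raises RuntimeError, without issuing any further setpoint, as soon as the
    supply reports a trip or the readback never reaches the setpoint.
    """
    results = []
    for v in voltages:
        set_voltage(v)
        readback = float("nan")
        for _ in range(max_polls):
            if is_tripped():
                raise RuntimeError(f"supply tripped at setpoint {v} V; scan aborted")
            readback = read_voltage()
            if abs(readback - v) <= tolerance_v:
                break
            time.sleep(poll_interval_s)
        else:
            # Polling loop finished without `break`: the readback never settled.
            raise RuntimeError(f"readback {readback} V never reached {v} V; scan aborted")
        results.append((v, readback, read_current()))
    return results
```

The main design choice is to leave the supply alone after a trip rather than setting the next value. Whether to also ramp the output down afterwards is left to the operator.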

Attachment 1: IV_curve_29K.png

45 | 25 Oct 2019 07:44 | Simone Stracka | Routine | Hardware | MB2 activities on October 25

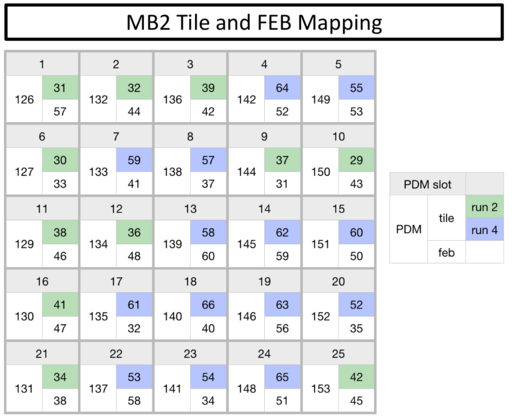

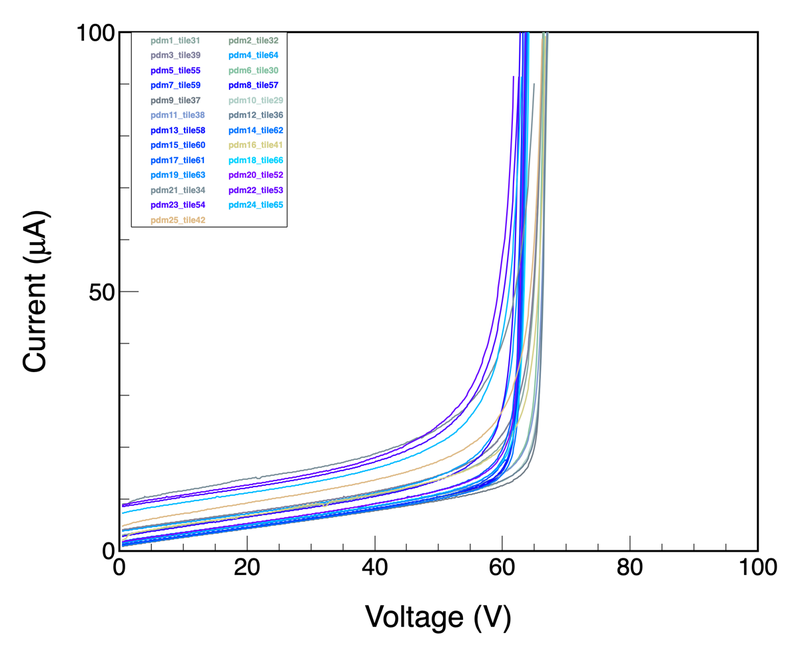

Crew: Edgar Sanchez Garcia, Sam Hill, Simone Stracka, Tom Thorpe, Yi Wang

Morning: set up the equipment for the IV-curve measurements. We enabled the interlock system on the SMU in order to go above 42 V (handle with care).

Afternoon: measured IV curves. Note that there is a voltage drop along the LV cable that needs to be compensated for:

Chan 1 is set at +3.3V drawing 1.46A; sense line reads +2.39V

Chan 2 is set at -3.3V drawing 1.46A; sense line reads -2.39V
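The numbers above give the size of the compensation. This is a worked sketch. It assumes the sense line measures the voltage at the load end, and that the load keeps drawing about 1.46 A when the setpoint is raised. It may not, so treat the result as a starting point only. The helper names are hypothetical.

```python
def cable_drop(set_v: float, sense_v: float, current_a: float):
    """Return (voltage drop in V, implied cable resistance in ohm)."""
    drop_v = abs(set_v) - abs(sense_v)
    return drop_v, drop_v / current_a


def compensated_setpoint(target_v: float, current_a: float, resistance_ohm: float) -> float:
    """Setpoint magnitude needed for target_v at the load, given the I*R loss in the cable."""
    return abs(target_v) + current_a * resistance_ohm


drop_v, r_ohm = cable_drop(3.3, 2.39, 1.46)        # ~0.91 V, ~0.62 ohm (Chan 1; Chan 2 is symmetric)
setpoint = compensated_setpoint(3.3, 1.46, r_ohm)  # ~4.21 V magnitude
print(f"drop {drop_v:.2f} V, R {r_ohm:.3f} ohm, setpoint ~{setpoint:.2f} V")
```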

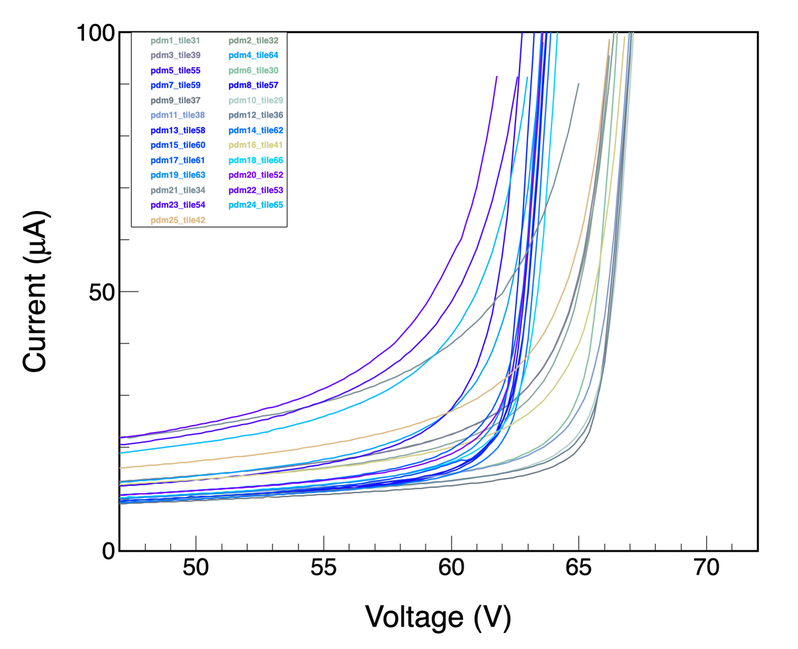

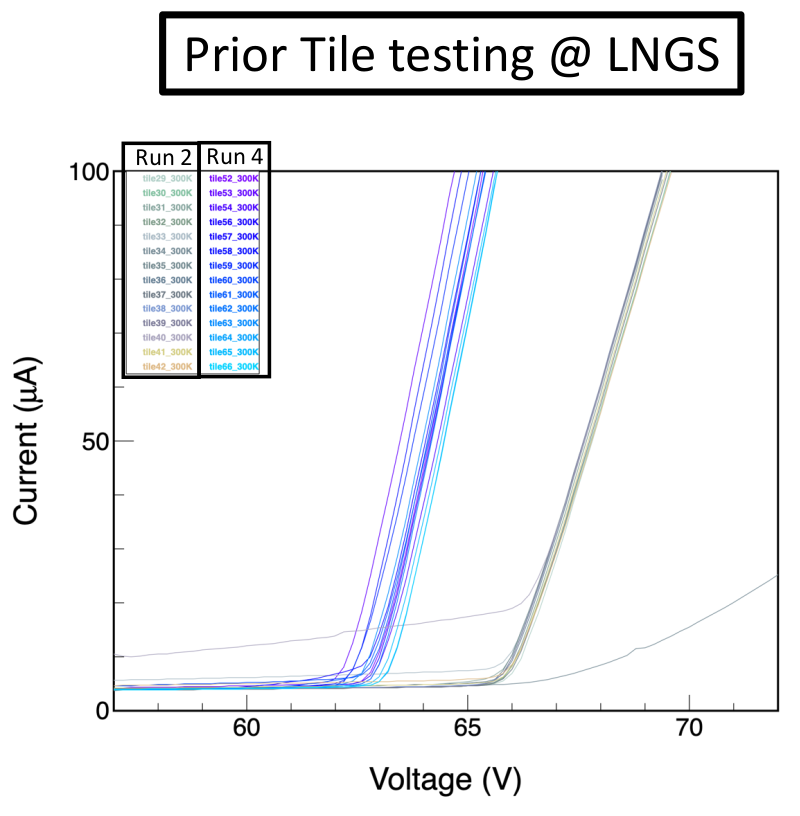

The IV curves scan from 0 to 80 V in 200 mV steps with a 100 µA current limit: elog:45/2, elog:45/3.

Both runs are visible (see elog:45/1 for the color code and mapping).

Compare with elog:45/4, obtained by probing individual tiles under more controlled lighting conditions.
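A scan like this (0 to 80 V in 200 mV steps, 100 µA limit) can be described by an explicit list of setpoints computed from an integer step index. This avoids accumulating floating-point error from repeated `v += step`. The compliance flag below treats any reading within 1% of the current limit as clipped. That threshold is an illustrative assumption, not a setting of the SMU, and both helper names are hypothetical.

```python
from typing import List


def voltage_grid(start_v: float, stop_v: float, step_v: float) -> List[float]:
    """Inclusive list of setpoints start_v, start_v + step_v, ..., stop_v."""
    n_steps = round((stop_v - start_v) / step_v)
    # Rounding to 1 uV keeps values like 0.6 from coming out as 0.6000000000000001.
    return [round(start_v + i * step_v, 6) for i in range(n_steps + 1)]


def at_compliance(current_a: float, limit_a: float = 100e-6, margin: float = 0.01) -> bool:
    """True if a current reading is within `margin` (fractional) of the limit."""
    return abs(current_a) >= (1.0 - margin) * limit_a


grid = voltage_grid(0.0, 80.0, 0.2)
print(len(grid), grid[:3], grid[-1])   # 401 setpoints from 0.0 to 80.0 V
```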

Attachment 1: TileFEBMapping_MB2.png

Attachment 2: iv_warm_1.png

Attachment 3: iv_warm_1_zoom.png

Attachment 4: IndividualTileAtLNGS.png

44 | 25 Oct 2019 07:17 | Simone Stracka | Routine | Hardware | Photoelectronics activities on October 24

Crew: Edgar Sanchez Garcia, Sam Hill, Simone Stracka, Tom Thorpe, Yi Wang

Morning: tested the MB2 photocurrents on the tabletop using the SMU and the Keithley switching matrix. All values measured between 1 and 10 µA.

Afternoon: tested the PDU mounting procedure with the TPC assembled and with the mockup. No showstoppers; this will be the installation procedure.

With the mockup on the table we double-checked the resistances and confirmed that all values are as before cooling, indicating no additional damage to the wire bonds.


43 | 16 Jul 2019 03:53 | Marco Rescigno | Routine | General | run 774, scintillation events triggering on all 24 channels and 200 us window

Majority of 8, threshold at 1000 ADC counts, 24 channels enabled (16 trigger signals), 10 Hz, 200 µs window, trigger at 9.5 µs.

10 k events recorded.

By direct inspection, pileup appears to be at the 10% level in the 200 µs window.
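If the pileup pulses arrive independently (a Poisson process, which is an assumption here), the 10% figure can be turned into an estimate of the underlying rate of pulses large enough to notice. That rate can then be rescaled to other window lengths, such as the 30 µs used in runs 772 and 773. The sketch also treats the "10% level" as the fraction of 200 µs windows containing at least one extra pulse, which is another assumption.

```python
import math


def implied_rate_hz(pileup_fraction: float, window_s: float) -> float:
    """Poisson rate r such that P(at least one extra pulse in window_s) = pileup_fraction."""
    return -math.log(1.0 - pileup_fraction) / window_s


def pileup_probability(rate_hz: float, window_s: float) -> float:
    """Poisson probability of at least one extra pulse in a window of window_s seconds."""
    return 1.0 - math.exp(-rate_hz * window_s)


rate = implied_rate_hz(0.10, 200e-6)   # roughly 527 Hz
print(f"implied rate ~ {rate:.0f} Hz; expected pileup in a 30 us window ~ {pileup_probability(rate, 30e-6):.1%}")
```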

Attachment 1: PileupEvent.png

42 | 16 Jul 2019 03:51 | Marco Rescigno | Routine | General | run 773, scintillation events triggering on all 24 channels

Majority of 5, threshold at 1000 ADC counts, 24 channels enabled (16 trigger signals), 100 Hz, 30 µs window.

38 k events recorded.
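A majority trigger like the ones in these runs fires when at least N of the trigger signals exceed their threshold (here 1000 ADC counts from baseline) at about the same time. The toy model below illustrates this and is not the chronobox firmware. The per-sample processing, the coincidence `gate` (in samples) and the use of the absolute deviation from baseline are all assumptions, and `majority_trigger` is a hypothetical name.

```python
from typing import List, Optional, Sequence


def majority_trigger(
    waveforms: Sequence[Sequence[int]],
    baselines: Sequence[float],
    threshold: float,
    majority: int,
    gate: int = 1,
) -> Optional[int]:
    """Return the first sample index at which at least `majority` signals have crossed
    threshold within the last `gate` samples, or None if that never happens."""
    n_samples = min(len(wf) for wf in waveforms)
    last_hit: List[Optional[int]] = [None] * len(waveforms)
    for t in range(n_samples):
        for i, (wf, base) in enumerate(zip(waveforms, baselines)):
            if abs(wf[t] - base) >= threshold:
                last_hit[i] = t  # remember the most recent crossing of signal i
        active = sum(1 for h in last_hit if h is not None and t - h < gate)
        if active >= majority:
            return t
    return None
```

A wider `gate` makes signals that cross threshold a few samples apart count as coincident, which is roughly what a hardware coincidence window does.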


41 | 16 Jul 2019 03:17 | Marco Rescigno | Routine | General | run 772, scintillation events triggering on all channels

Majority of 5, threshold at 1000 ADC counts, 13 channels enabled (10 trigger signals), 50 Hz, 30 µs window.

58 k events recorded.


40 | 16 Jul 2019 02:41 | Marco Rescigno | Problem | Trigger | Busy handling

This morning I tried to take some data with Vbias = 65 V, which seems better for the SiPMs in MB1.

Busy handling is still erratic.

At the beginning it seemed to work fine with busy_invert off and busy_enable on.

After a while (maybe after the first busy signal?) the only workaround seemed to be to disable busy_enable.


39 | 16 Jul 2019 02:39 | Marco Rescigno | Routine | General | run 771, scintillation events triggering on ch11 and ch12 of board0

Trigger set up with a threshold of 1500 ADC counts with respect to the baseline, on just two channels.

This is also meant to limit the trigger rate to 50 Hz, since the busy still did not work.

100 k events on disk.


38 | 16 Jul 2019 01:58 | Marco Rescigno | Routine | General | Run 766 (Laser)

Laser run with Vbias = 65 V, the new recommended value from the PE group.

500 k events acquired.


37 | 13 Jul 2019 14:50 | Marco Rescigno | Routine | General | Long Laser run on disk

Run 747, 250 k events.

As requested by Alessandro: 40% post-trigger, acquisition window 16 µs.
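The acquisition-window settings in these entries can be converted between microseconds and the V1725 "custom size" value using entry 32, where a custom size of 500 corresponds to 20 µs, i.e. 40 ns per unit. The sketch below assumes that ratio holds for all window lengths, and that "40% post trigger" means 40% of the window lies after the trigger. `window_layout` is a hypothetical helper.

```python
from typing import Tuple

NS_PER_UNIT = 20_000 / 500  # 40 ns per custom-size unit, from "custom size 500 (20 us)"


def window_layout(window_us: float, post_trigger_fraction: float) -> Tuple[int, float, float]:
    """Return (custom size, pre-trigger us, post-trigger us) for a given window."""
    custom_size = round(window_us * 1000 / NS_PER_UNIT)
    post_us = window_us * post_trigger_fraction
    return custom_size, window_us - post_us, post_us


print(window_layout(16, 0.40))   # run 747: custom size 400, ~9.6 us before and ~6.4 us after the trigger
```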


36

|

12 Jul 2019 11:34 |

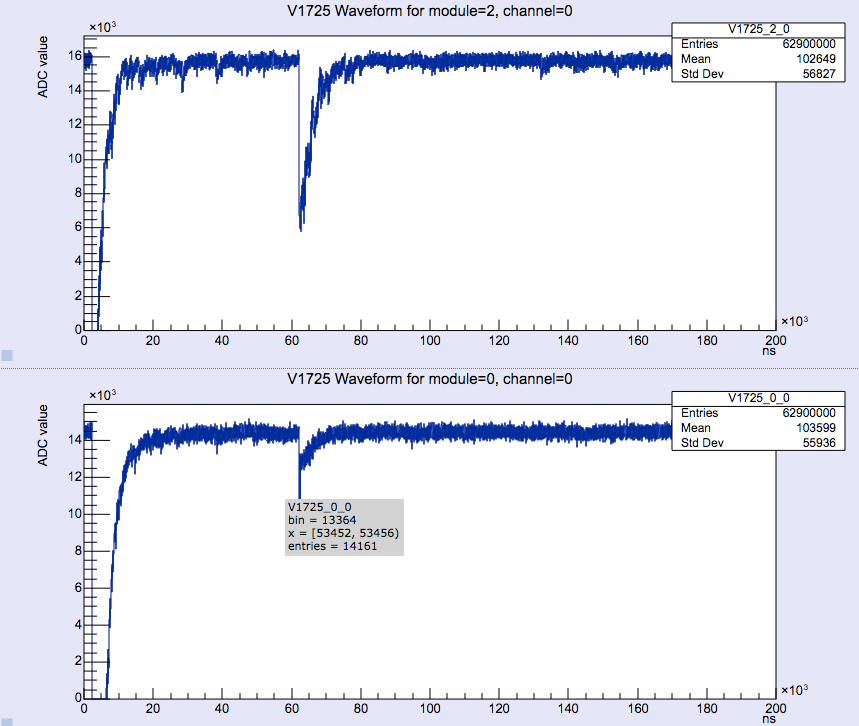

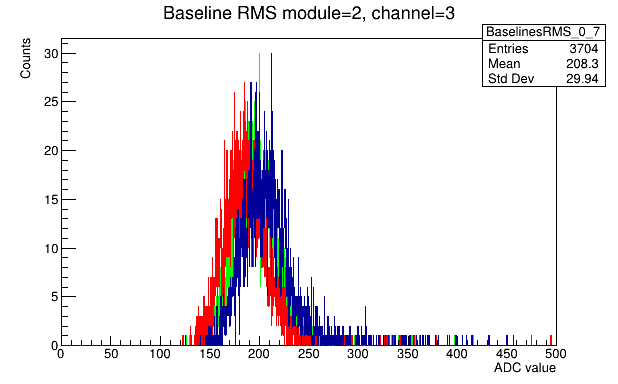

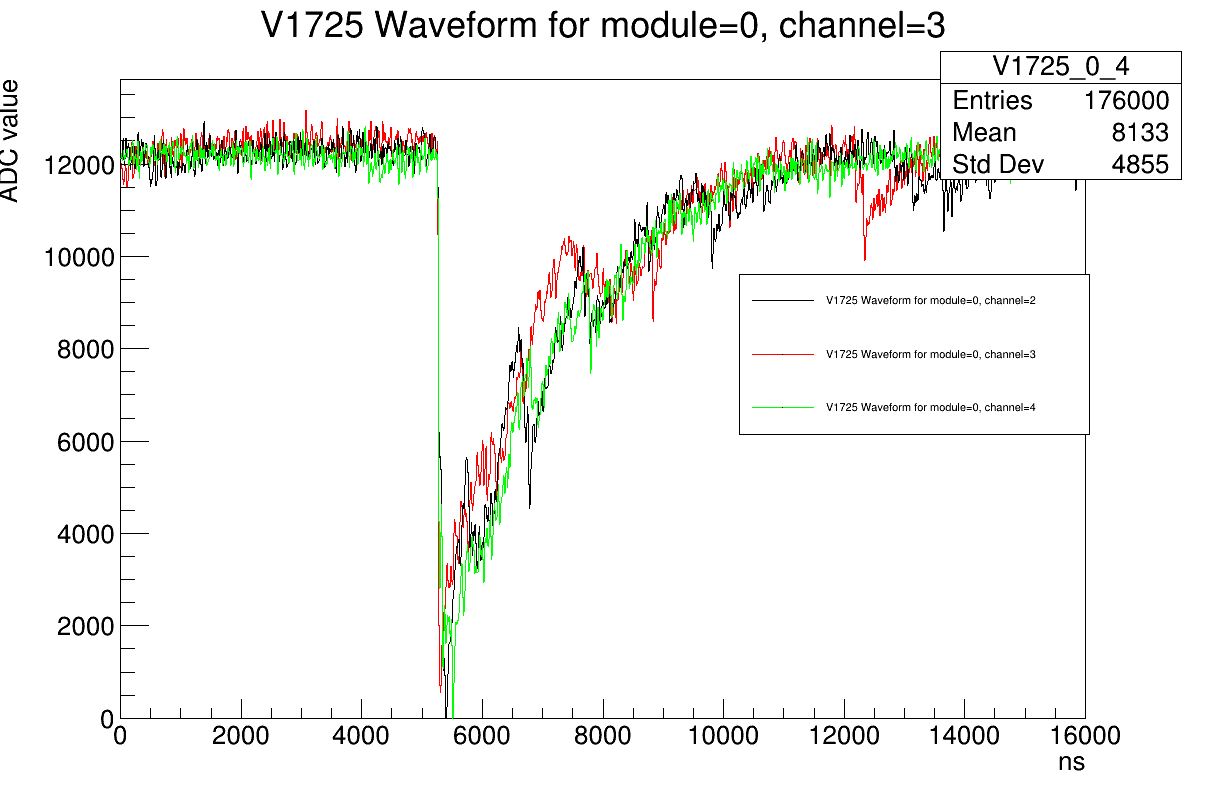

Marco Rescigno | Routine | General | MB1 test in proto-0 setup/2 Run 718 |

Run 718 has a 25-channel readout: Board 0 ch 0-15 and Board 0 ch 0-7.

Laser triggered; there is some noise, though maybe not as bad as it looks here.

The baseline RMS is 200 ADC counts. The single-PE peak is about 100-200 ADC counts from the baseline.

Looking carefully, some very nice scintillation events can be found.
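The two numbers in this entry can be compared directly. With a baseline RMS of 200 ADC counts and a single-PE amplitude of 100 to 200 ADC counts, the single-PE signal is only 0.5 to 1 times the noise RMS. The sketch below shows one common way to compute such baseline numbers from the pre-trigger part of a waveform. The number of pre-trigger samples `n_pre` is an assumption, and both helper names are hypothetical.

```python
import statistics
from typing import Sequence, Tuple


def baseline_stats(waveform: Sequence[int], n_pre: int) -> Tuple[float, float]:
    """Mean and RMS (population standard deviation) of the first n_pre samples."""
    pre = list(waveform[:n_pre])
    return statistics.mean(pre), statistics.pstdev(pre)


def spe_to_noise(spe_amplitude_adc: float, baseline_rms_adc: float) -> float:
    """Ratio of single-PE amplitude (above baseline) to baseline RMS."""
    return spe_amplitude_adc / baseline_rms_adc


print([spe_to_noise(a, 200) for a in (100, 200)])  # run 718 numbers
```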

Attachment 1: RMS_run718.gif

Attachment 2: SPE.gif

Attachment 3: Scintillation.gif

35 | 12 Jul 2019 07:58 | Marco Rescigno | Routine | General | MB1 test in proto-0 setup/day 2

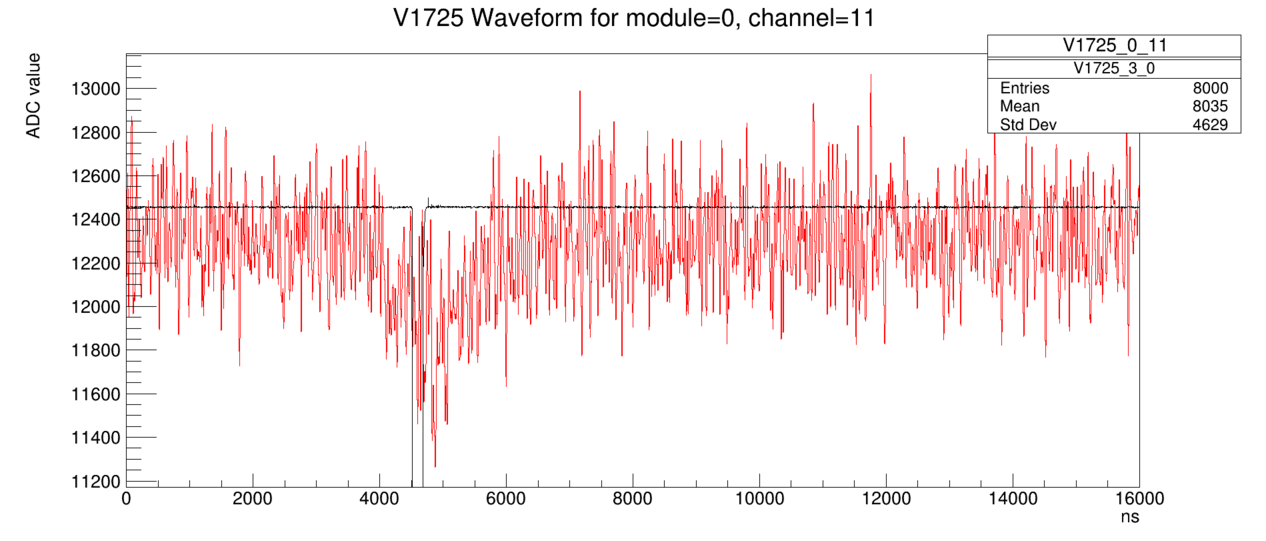

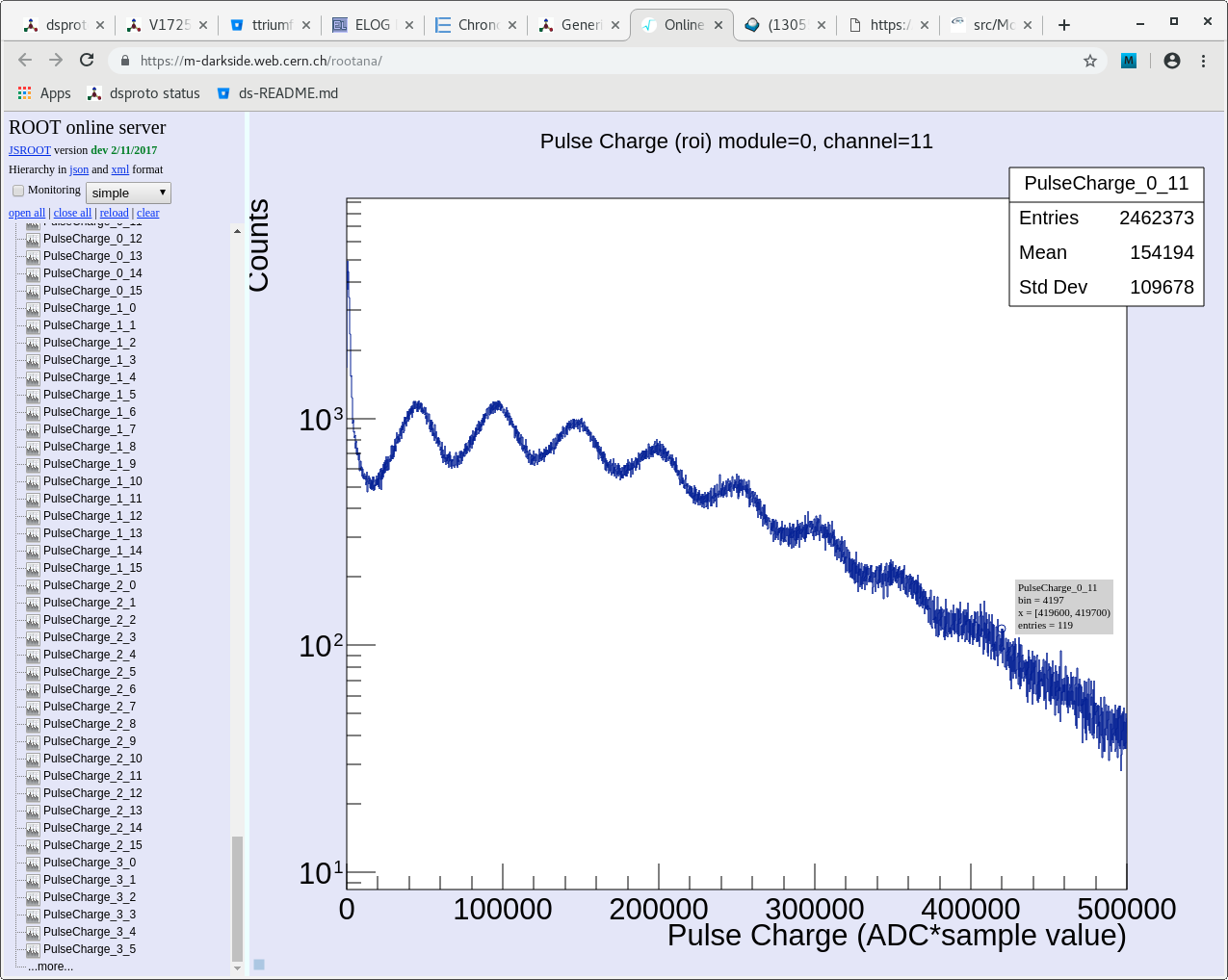

Implemented a simple charge integration on the MIDAS display; most of the channels look almost as good as this one.

Nothing on the DAQ side changed, apart from the DAC value, which was moved to 5000 to allow a slightly greater dynamic range.
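A simple charge integration of this kind usually means summing the baseline-subtracted samples over a fixed window. The sketch below is not the code behind the MIDAS display. The integration window and the negative-pulse polarity are assumptions, `integrate_charge` is a hypothetical name, and the result is in ADC counts × samples.

```python
from typing import Sequence


def integrate_charge(
    waveform: Sequence[int],
    baseline: float,
    start: int,
    stop: int,
    negative_pulses: bool = True,
) -> float:
    """Sum of (sample - baseline) over samples [start, stop).

    For negative-going pulses the sign is flipped so that larger signals give
    larger positive charges.
    """
    total = sum(s - baseline for s in waveform[start:stop])
    return -total if negative_pulses else total
```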

Attachment 1: Screenshot_from_2019-07-13_17-45-14.png

34 | 12 Jul 2019 06:01 | Marco Rescigno | Routine | General | Run 703

Run 703 is being written to disk.

16 channels of board 00.

Laser trigger at 10 Hz.

The run ended with:

[feoV1725.cxx:1033:read_trigger_event,ERROR] Error: did not receive a ZMQ bank. Stopping run.


33 | 12 Jul 2019 02:05 | Marco Rescigno | Problem | Trigger | MB1 test in proto-0 setup/1

Tried to feed the laser sync signal into the chronobox (clkin1 input); apparently all triggers were dropped by the chronobox.

The problem is that the same now also happens with the regular setup, where the clkin1 signal is taken from the dual timer.


32 | 12 Jul 2019 01:27 | Marco Rescigno | Configuration | | MB1 test in proto-0 setup/1

Changed the custom size to 500 (20 µs); tested OK with run 681.


31 | 11 Jul 2019 15:52 | Thomas | Routine | Trigger | invert first chronobox busy signal

The DAQ seems to be ready for tests with proto-0 tomorrow.

I had to invert the first busy input in order to get the chronobox to produce triggers. I modified the setup script setup_chronobox.sh so that this is now the default. Not sure why it is necessary.

Another interesting fact: it seems the chronobox only asks for a DHCP IP address when it first boots. I think the chronobox and ds-proto-daq were rebooted at the same time, so the ds-proto-daq DHCP server was probably not running yet when the chronobox asked for an IP. The chronobox got its IP fine when it was power cycled.


30 | 02 Jul 2019 18:01 | Thomas | Routine | Software | MIDAS running again

Darkside people seem to be doing some tests at CERN next week. It looks like they aren't going to use our DAQ (I think they will just use the CAEN tools), but we took the opportunity to make the TRIUMF DAQ work again. There were a couple of issues:

1) I needed to invert the busy signal for input 0 of the chronobox in order to get any triggers out of the system. Not sure why; I don't think I had to do that before. But it is working now.

2) The CERN web proxy was initially not working. Somehow I 'fixed' it by changing the proxy configuration variable SERVICE_NAME from 'midas' to 'dummy'. I don't understand why that fixed it, but now you can see the DAQ here:

https://m-darkside.web.cern.ch/chronobox/


29 | 11 Apr 2019 08:20 | Thomas | Routine | Trigger | Missing ZMQ banks

I have done a couple of longer tests of the DAQ setup. The runs were done at a high trigger rate of ~60 Hz, with the V1725 boards asserting their busy to throttle the trigger.

I found that after a few hours (~5 hours) we would no longer get ZMQ packets from the chronobox. You can see this in error messages like:

15:29:04.092 2019/04/11 [feov1725MTI00,ERROR] [feoV1725.cxx:1033:read_trigger_event,ERROR] Error: did not receive a ZMQ bank. Stopping run.

10:26:37.158 2019/04/11 [mhttpd,INFO] Run #657 started

If I start a new run, ZMQ packets are still missing. However, if I restart the frontend program (and hence re-initialize the ZMQ link), the chronobox starts sending triggers again. So it may be more of a problem with the ZMQ setup in the MIDAS frontend. Needs more investigation.
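Restarting the frontend (and so re-creating the ZMQ link) brings the triggers back. One possible mitigation is therefore to re-open the link automatically after several consecutive receive timeouts instead of stopping the run. The sketch below only illustrates that pattern in Python; the real frontend is feoV1725.cxx. `ZmqWatchdog` is hypothetical. `open_link` and `receive` stand in for the actual ZMQ socket setup and a receive-with-timeout call (with pyzmq, for example, a socket polled with a timeout). Reconnecting could also hide a chronobox-side fault, which is why the sketch counts reconnects so they can be logged.

```python
from typing import Any, Callable, Optional


class ZmqWatchdog:
    """Re-open the trigger link after `max_misses` consecutive empty receives."""

    def __init__(
        self,
        open_link: Callable[[], Any],
        receive: Callable[[Any, float], Optional[bytes]],
        max_misses: int = 3,
    ) -> None:
        self.open_link = open_link
        self.receive = receive
        self.max_misses = max_misses
        self.link = open_link()
        self.misses = 0
        self.reconnects = 0  # count reconnects so they can be logged and investigated

    def next_bank(self, timeout_s: float) -> Optional[bytes]:
        """Return the next bank, or None on timeout (re-opening the link if needed)."""
        bank = self.receive(self.link, timeout_s)
        if bank is not None:
            self.misses = 0
            return bank
        self.misses += 1
        if self.misses >= self.max_misses:
            close = getattr(self.link, "close", None)
            if callable(close):
                close()  # drop the stale link before opening a new one
            self.link = self.open_link()
            self.reconnects += 1
            self.misses = 0
        return None
```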