| ID | Date | Author | Type | Category | Subject |

|

52

|

28 Oct 2019 15:10 |

Simone Stracka | Configuration | Hardware | LV for steering module and current status of CAEN mainframe |

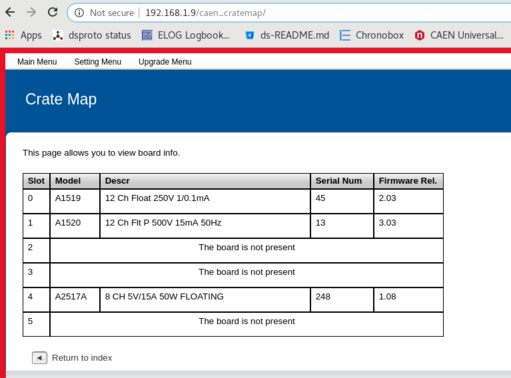

The HV board from Naples did not turn on: Yury gave it to the CAEN guys to check and/or bring back for replacement.

Yi and Luigi rented a new HV module (A1519). The HV module (A1520P) we used for tests of the I-V script is also present in the Mainframe (see below).

The first 24 channels (slots 0 and 1) are therefore HV. If the new HV does not show up on time we'll try and adapt the cables to work with A1519.

The A2517A module is LV. It is currently operated from the DAQ PC using CAEN_HVPSS_ChannelsController.jnlp (located in the Desktop/SteeringModule folder).

The three low voltage channels (0,1,2) should be turned on at the same time by setting Pw = ON.

Settings:

Channel 0 and 1: I0Set = 2.0 A , V0Set = 2.5 V , UNVThr = 0 V, OVVThr = 3.0 V, Intck = Disabled

IMon = 1.44 (this depends on the illumination) , VMon = 2.48 V , VCon = 2.79 V

Channel 2: I0Set = 1.0 A , V0Set = 5.0 V , UNVThr = 0 V, OVVThr = 5.5 V, Intck = Disabled

IMon = 0.07 , VMon = 4.998 V , VCon = 5.02 V
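As a quick sanity check, the settings above can be written down as data and compared against the monitored values. This is only a sketch: `LV_SETTINGS` and `check_channel` are hypothetical names, it assumes IMon is reported in amps, and it does not talk to the CAEN mainframe.

```python
# Nominal LV settings copied from this entry (A2517A, channels 0-2).
LV_SETTINGS = {
    0: {"I0Set": 2.0, "V0Set": 2.5, "UNVThr": 0.0, "OVVThr": 3.0},
    1: {"I0Set": 2.0, "V0Set": 2.5, "UNVThr": 0.0, "OVVThr": 3.0},
    2: {"I0Set": 1.0, "V0Set": 5.0, "UNVThr": 0.0, "OVVThr": 5.5},
}


def check_channel(channel, vmon, imon):
    """Return a list of problems for one channel (empty list means OK).

    vmon is in volts; imon is assumed to be in amps.
    """
    s = LV_SETTINGS[channel]
    problems = []
    # The monitored voltage should sit between the under/over-voltage thresholds.
    if not (s["UNVThr"] < vmon < s["OVVThr"]):
        problems.append(
            f"ch {channel}: VMon {vmon} V outside ({s['UNVThr']}, {s['OVVThr']}) V"
        )
    # The monitored current should not exceed the current limit I0Set.
    if imon > s["I0Set"]:
        problems.append(f"ch {channel}: IMon {imon} A above I0Set {s['I0Set']} A")
    return problems


# Values quoted in this entry.
print(check_channel(0, vmon=2.48, imon=1.44))
print(check_channel(2, vmon=4.998, imon=0.07))
```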

If the channels trip, they cannot be ramped back up until the alarms are cleared.

|

| Attachment 1: CAENmainframe.png

|

|

|

1

|

11 Dec 2018 15:17 |

Thomas | Configuration | Software | Setup elog for ds-proto-daq |

1) Install and tweak elog:

[root@ds-proto-daq ~]# cat /etc/elogd.cfg

[global]

port = 8084

SMTP host = trmail.triumf.ca

Use Email Subject = {$logbook} $Subject

Remove on reply = Author

Quote on reply = 1

URL = https://ds-proto-daq/elog/

[DS Prototype]

Theme = default

Comment = ELOG for DS Prototype MIDAS DAQ

Attributes = Author, Type, Category, Subject

Options Type = Routine, Problem, Problem Fixed, Configuration, Other

Options Category = General, Hardware, Digitizer, Trigger, MIDAS, Software, Other

Extendable Options = Category, Type

Required Attributes = Author, Type

Page Title = ELOG - $subject

Reverse sort = 1

Quick filter = Date, Type

Email all = lindner@triumf.ca

[root@ds-proto-daq ~]# systemctl start elogd.service

[root@ds-proto-daq ~]# systemctl is-active elogd.service

active

[root@ds-proto-daq ~]# systemctl enable elogd.service

2) Tweak apache and restart

[root@ds-proto-daq ~]# grep elog /etc/httpd/conf.d/ssl-ds-proto-daq.conf

ProxyPass /elog/ http://localhost:8084/ retry=1

[root@ds-proto-daq ~]# systemctl restart httpd

3) Change MIDAS to use elog

[dsproto@ds-proto-daq bin]$ odbedit

[local:dsproto:R]/>cd Elog/

[local:dsproto:R]/Elog>create STRING URL

String length [32]: 256

[local:dsproto:R]/Elog>set URL https://ds-proto-daq.triumf.ca/elog/DS+Prototype/ |

|

3

|

13 Dec 2018 11:02 |

Thomas | Routine | Hardware | Testing V1725 digitizers |

Couple weeks of work documented in one elog...

V1725 serial numbers: 455, 392, 460, 462, 474

firmware: DPP-ZLE+

The A3818 kernel module is not getting loaded at start-up... the insmod line is in /etc/rc.local, but it is not getting called

[root@ds-proto-daq ~]# grep 3818 /etc/rc.local

# Load A3818 driver...

/sbin/insmod /home/dsproto/packages/A3818Drv-1.6.0/src/a3818.ko

Called manually for now...

Reworked and cleaned up DEAP version of V1725 code

- Updated register map

- Removed smartQT code

Quick test: the V1725 seems to be getting busy at a maximum rate of 10kHz (with no samples being saved though).

Got preliminary documentation from CAEN for ZLE-plus firmware... posted here:

/home/dsproto/packages/CAEN_ZLE_Info

In particular, manual shows how the data structure is different for ZLE-plus data banks (as compared to V1720 ZLE data banks).

Fixed the /etc/rc.local setup so that the A3818 driver is loaded and mhttpd/mlogger is started on reboot.

Looking at the V1725-ZLE register list. I don't entirely understand how we are going to do the trigger outputs and the busy. The manual makes it clear that the triggers from each pair of channels are combined and sent to the trigger logic, so there should be 8 trigger primitives from the board. The LVDS I/O connector allows groups of 4 outputs to be configured as either trigger outputs or busy/veto outputs. So I guess I will configure the first two groups to output the trigger primitives and the third group to output the BUSY information.

Added code to do the ADC calibration; added extra equipment for reading out ADC temperatures periodically.

Renewed the lets-encrypt SSL certificate... but haven't set up automatic renewal yet...

[root@ds-proto-daq ~]# certbot renew --apache

[root@ds-proto-daq ~]# systemctl restart httpd

Analyzer program basically working. Pushed to bitbucket:

https://bitbucket.org/ttriumfdaq/dsproto_analyzer/src |

|

4

|

09 Jan 2019 12:22 |

Thomas | Routine | General | V1725 LVDS outputs |

Pierre figured out that the NIM crate was not working. We now see LVDS outputs from the individual channels firing.

Each pair of channels is ganged together into a single self-trigger output. Setting register 0x1n84 to 3 makes the self-trigger for that pair fire if either input channel fires.
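A small sketch of how the 0x1n84 addresses could be generated for a 16-channel board. It assumes that n is the channel index and that one write per pair, at the even channel of the pair, is enough; both should be checked against the CAEN register manual. The helper names are hypothetical.

```python
SELFTRIGGER_LOGIC_BASE = 0x1084  # register 0x1n84 with n = 0
OR_MODE = 3                      # value quoted above: fire if either channel fires


def selftrigger_logic_address(n):
    """Return the address of register 0x1n84 for index n (0-15)."""
    if not 0 <= n <= 0xF:
        raise ValueError(f"index out of range: {n}")
    # n occupies the third hex digit of the address.
    return SELFTRIGGER_LOGIC_BASE | (n << 8)


def selftrigger_writes(n_channels=16):
    """Return (address, value) pairs, one per channel pair.

    Assumption: the register is addressed through the even channel of each pair.
    """
    return [(selftrigger_logic_address(n), OR_MODE) for n in range(0, n_channels, 2)]


for address, value in selftrigger_writes():
    print(f"write 0x{address:04X} <- {value}")
```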

Bryerton provided CDM outputting 50MHz clock; all V1725s now running with external clock.

Modified frontend to read out 4 modules and 16 channels per module.

Still need to modify the analyzer to show data for all 4 modules. |

|

5

|

11 Jan 2019 11:07 |

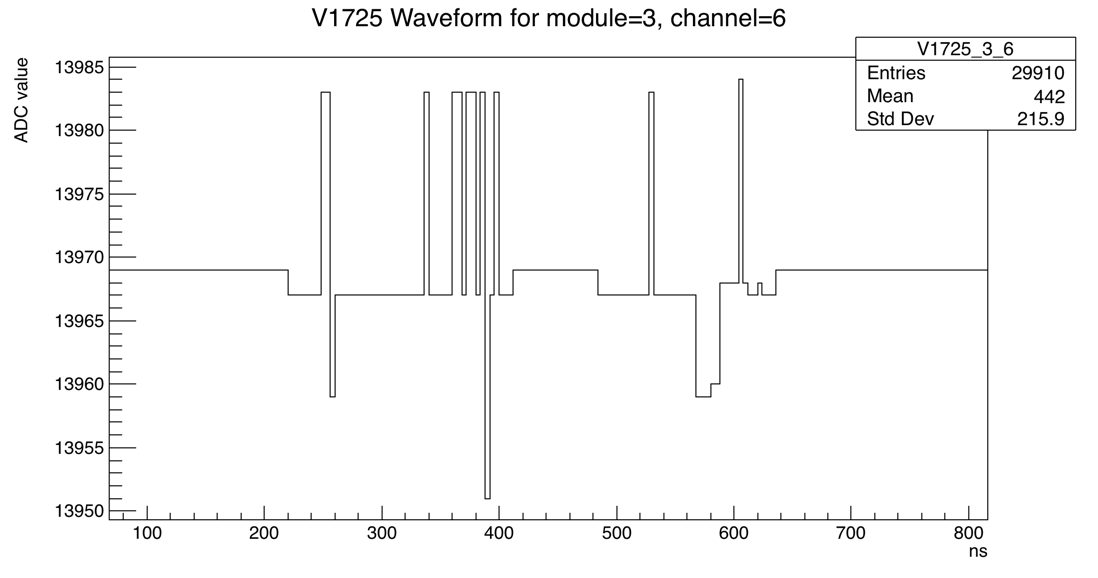

Thomas | Routine | Digitizer | Data corruption for ADC channels |

I modified the analyzer so that it shows data from all four modules.

I find evidence of corruption of the V1725 ADC data on a couple of channels. An example of a corrupted waveform is in the attachment: there are a bunch of fluctuations of exactly 16 ADC counts, which seems unphysical. So far I see this problem in these channels:

module 0, ch 0

module 0, ch 1

module 0, ch 13

module 1, ch 4

module 3, ch 6
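The 16-count signature described above could be flagged automatically in the analyzer. The sketch below is not the analyzer code. It counts sample-to-sample jumps of exactly 16 ADC counts in a waveform; the function names and the 5% threshold are assumptions chosen for illustration.

```python
def count_16_count_steps(waveform, step=16):
    """Count sample-to-sample jumps of exactly +/-step ADC counts."""
    return sum(1 for a, b in zip(waveform, waveform[1:]) if abs(b - a) == step)


def looks_corrupted(waveform, min_fraction=0.05):
    """Flag a waveform if too many of its steps are exactly 16 counts.

    min_fraction is a hypothetical threshold and would need tuning on real data.
    """
    if len(waveform) < 2:
        return False
    return count_16_count_steps(waveform) / (len(waveform) - 1) >= min_fraction


clean = [1000, 1001, 999, 1000, 1002, 1001]
suspect = [1000, 1016, 1000, 1016, 1001, 1000]
print(looks_corrupted(clean), looks_corrupted(suspect))
```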

I saw similar corruption on the V1730 readout. CAEN does have a scheme for ADC calibration (by poking a register), which worked well for the V1730. I thought that I had implemented this ADC calibration for the V1725, but I might have messed it up. I'll look at this again. |

| Attachment 1: dsproto_corrupt.gif

|

|

|

6

|

17 Jan 2019 15:47 |

Thomas | Routine | Hardware | Added raided HD to ds-proto-daq |

Added a pair of 10TB hard drives in RAID-1 to ds-proto-daq. MIDAS data files will get written to this RAID volume.

[dsproto@ds-proto-daq tmp]$ df -h /data

Filesystem Size Used Avail Use% Mounted on

/dev/md0 9.1T 180M 8.6T 1% /data

[dsproto@ds-proto-daq tmp]$ cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 sdc1[1] sdb1[0]

9766435648 blocks super 1.0 [2/2] [UU]

[>....................] resync = 0.9% (88214784/9766435648) finish=4372.8min speed=36886K/sec

bitmap: 73/73 pages [292KB], 65536KB chunk

unused devices: <none> |

|

11

|

07 Feb 2019 17:30 |

Thomas | Routine | Software | Testing the maximum data throughput |

First check the maximum trigger rate and maximum data rate for different sample lengths (for each channel):

| Sample length | Maximum rate | MB/s | CPU % (per thread) |
|---|---|---|---|
| 16us | 0.44kHz | 231 | 20 |
| 6.4us | 1.04kHz | 214 | 20 |
| 3.2us | 1.95kHz | 198 | 22 |
| 1.6us | 3.12kHz | 160 | 26 |
| 0.8us | 4.88kHz | 127 | 30 (what are these threads doing?) |

Looking at the code more, I see that there is a maximum size for the block transfer of 10kB. I increased this to 130kB (which is the maximum amount that this board can make per event). Now I find:

| Sample length | Maximum rate | MB/s | CPU % (per thread) |
|---|---|---|---|
| 16us | 0.68kHz | 346 | 7 |
| 6.4us | 1.46kHz | 300 | 10 |
| 1.6us | 3.6kHz | 190 | 25 |

Good. So for long samples we are actually slightly above the maximum transfer rate of 85MB/s*4 = 340MB/s
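The measured rates can be cross-checked against the payload size expected per event. The sketch below assumes 4 ns per sample, 2 bytes per sample, 16 channels per board and 4 boards, and it ignores event headers, so it should come out slightly below the measured MB/s for short samples. The function names are hypothetical.

```python
NS_PER_SAMPLE = 4        # assumption: 250 MS/s digitizer
BYTES_PER_SAMPLE = 2     # assumption: one 16-bit word per sample
CHANNELS_PER_BOARD = 16  # assumption
N_BOARDS = 4             # assumption


def event_size_bytes(sample_length_us):
    """Payload bytes per event summed over all boards (headers ignored)."""
    samples = sample_length_us * 1000 / NS_PER_SAMPLE
    return samples * BYTES_PER_SAMPLE * CHANNELS_PER_BOARD * N_BOARDS


def throughput_mb_per_s(sample_length_us, rate_khz):
    """Expected data rate in MB/s for a given sample length and trigger rate."""
    return event_size_bytes(sample_length_us) * rate_khz * 1e3 / 1e6


# (sample length in us, measured rate in kHz, measured MB/s) after the block transfer change
for length_us, rate_khz, measured in [(16, 0.68, 346), (6.4, 1.46, 300), (1.6, 3.6, 190)]:
    expected = throughput_mb_per_s(length_us, rate_khz)
    print(f"{length_us} us: expected {expected:.0f} MB/s, measured {measured} MB/s")
```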

I tried writing the data to disk at the maximum 345MB/s rate; the DAQ couldn't keep up. The maximum rate was more like 270MB/s.

I think the mlogger was actually fine, but the write speed of the hard drive could not keep up, so I think we are limited by hardware in that case. We would need a large RAID array to be able to write faster. |

|

12

|

08 Feb 2019 12:08 |

Thomas | Problem | Hardware | Installed Marco's A3818; didn't work |

I installed Marco's A3818 PCIe card. It didn't seem to work: I got communication errors talking to link 2. The communication problems didn't happen right away, but started once the run started.

I swapped the fibres going to port 2 and port 3 on the A3818, and the problem stayed with port 2. So I conclude that this A3818 module is no good. |

|

15

|

05 Mar 2019 10:36 |

Thomas | Routine | Digitizer | Switched to standard V1725 firmware |

It turns out that the ZLE V1725 firmware we are using only supports reading out up to 4000 samples per channel.

We need 80000 samples to read out 200us, which is the requirement.

So we switch the V1725s to use the backup firmware on the board, which is the standard waveform firmware.

Firmware version is 17200410.

Expected data size for an event with 200us of data: 200000 ns * (1 sample / 4 ns) * 16 ch * 4 boards * 2 bytes/sample = 6.4MB per event

Measured max event rate of 60Hz with 385MB/s with 200us readout.

Needed to increase max event size, set buffer organization to 6 and set almost full to 32 in order to

accommodate the larger event size.

Changed some registers for different firmware:

- V1725_SELFTRIGGER_LOGIC

More tests needed |

|

16

|

05 Mar 2019 14:20 |

Thomas | Routine | Hardware | Installed network card |

I installed a PCIe 1Gbps network card and configured it as a private network. The PC (ds-proto-daq) is

192.168.1.1. I guess we can make the chronobox 192.168.1.2.

[root@ds-proto-daq ~]# ifconfig enp5s0

enp5s0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.1.1 netmask 255.255.255.0 broadcast 192.168.1.255

inet6 fe80::bb27:5db:f778:d584 prefixlen 64 scopeid 0x20<link>

ether 68:05:ca:8e:66:5c txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 27 bytes 4145 (4.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

device interrupt 17 memory 0xf72c0000-f72e0000 |

|

17

|

06 Mar 2019 14:16 |

Thomas | Routine | General | Retested the chronobox trigger logic |

I retested the chronobox trigger generation:

1) Inserting moderate sized pulse into channel 8 of V1725-0.

2) Configured threshold of V1725 so that channel triggers LVDS pulse into chronobox

3) Fan-out trigger out from chronobox to all V1725s.

4) busy signal from each V1725 fed into the chronobox

5) Start run, then push trigger from 20Hz up to 200Hz

6) System running stably! Actual trigger rate about 60.2Hz. The almost_full condition is set to 32 on the V1725s and the eStored on each board fluctuates below 32.

https://ds-proto-daq.triumf.ca/HS/Buffers/eStored?hscale=300&fgroup=Buffers&fpanel=eStored&scale=10m

The busy light on the V1725 never comes on, good.

The next thing we need is some way to start/stop the trigger generation on the chronobox, so that at begin-of-run triggers do not get sent before the V1725s have finished configuring.

Other notes:

a) It turned out that the register to set the V1725 channel trigger threshold was different for RAW vs ZLE

firmware (0x1080 vs 0x1060); after fixing that the channel threshold seemed to work as expected.

b) Map for chronobox NIM cables:

channel 1-4: busy IN from V1725

channel 5,7,8: trigger OUT from chronobox

channel 6: clock OUT from chronobox |

|

20

|

11 Mar 2019 12:30 |

Thomas | Routine | Trigger | New chronobox firmware; run start/stop implemented |

a) Bryerton implemented new version of the firmware. New features:

1) run start/stop state

2) at run start 6 events are sent with 200ms separation

3) there is a larger set of trigger counters, as well as settings for configuring the trigger.

b) chronobox webpage can be seen here:

https://ds-proto-daq.triumf.ca/chronobox/

The mod_tdm page gives configuration of run state and trigger. In particular

- button to start/stop run

- button to do manual trigger

- configure which channels are TOP and which are BOTTOM

- configure the number of TOP or BOTTOM channels that need to fire

- DecisionType: true= TOP AND BOTTOM groups must fire; false = TOP OR BOTTOM groups can fire.
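The trigger options listed above can be illustrated with a small model. This is not the chronobox firmware logic. It assumes that "number of channels that need to fire" means "at least N channels in the group", and all names are hypothetical.

```python
def trigger_decision(fired, top_channels, bottom_channels,
                     n_top_required, n_bottom_required, decision_type):
    """Model of the TOP/BOTTOM trigger decision.

    fired: iterable of channel numbers whose trigger primitive fired.
    decision_type: True  -> TOP AND BOTTOM groups must fire,
                   False -> TOP OR BOTTOM group can fire.
    Assumption: a group fires when at least N of its channels fired.
    """
    fired = set(fired)
    top_ok = len(fired & set(top_channels)) >= n_top_required
    bottom_ok = len(fired & set(bottom_channels)) >= n_bottom_required
    return (top_ok and bottom_ok) if decision_type else (top_ok or bottom_ok)


top = range(0, 8)      # hypothetical channel assignment
bottom = range(8, 16)  # hypothetical channel assignment
hits = {0, 1, 8}       # two TOP channels and one BOTTOM channel fired
print(trigger_decision(hits, top, bottom, 2, 2, decision_type=True))
print(trigger_decision(hits, top, bottom, 2, 2, decision_type=False))
```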

c) Start and stop run can also be done on command line with esper tool:

esper-tool write -d true 192.168.1.3 mod_tdm run

esper-tool write -d false 192.168.1.3 mod_tdm run
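From a script, the two commands above could be wrapped as follows. This is a sketch only: the argument list is copied from the commands in this entry, while the function names and the 5 second timeout are assumptions.

```python
import subprocess

CHRONOBOX_HOST = "192.168.1.3"  # address used in the commands above


def esper_run_command(start, host=CHRONOBOX_HOST):
    """Build the esper-tool argument list that sets the mod_tdm run flag."""
    return ["esper-tool", "write", "-d", "true" if start else "false",
            host, "mod_tdm", "run"]


def set_chronobox_run(start, host=CHRONOBOX_HOST, timeout_s=5.0):
    """Run esper-tool and return its exit status (timeout value is an assumption)."""
    result = subprocess.run(esper_run_command(start, host),
                            capture_output=True, text=True, timeout=timeout_s)
    return result.returncode


# Show the command lines without executing them.
print(" ".join(esper_run_command(True)))
print(" ".join(esper_run_command(False)))
```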

d) I modified the V1725 frontend to integrate the chronobox start/stop.

At run start

- configure V1725s

- start chronobox with the command-line esper-tool call

At end run

- add deferred transition function which stops run (with esper-tool), then checks whether all the events have

cleared from ring buffers.

- once ring buffers are cleared, finish stopping the V1725s.
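The end-of-run ordering described above can be sketched as a simple blocking loop. The real frontend uses a MIDAS deferred transition that is called repeatedly rather than a loop, so this is only an illustration of the ordering. The three callables and the timeout are hypothetical.

```python
import time


def stop_run(stop_trigger, buffers_empty, stop_digitizers,
             timeout_s=10.0, poll_s=0.01):
    """Stop the trigger first, drain the buffers, then stop the digitizers.

    Returns True if the buffers drained before the timeout, False otherwise.
    """
    stop_trigger()                      # e.g. the esper-tool call that stops the run
    deadline = time.monotonic() + timeout_s
    drained = True
    while not buffers_empty():          # poll until all events have been read out
        if time.monotonic() >= deadline:
            drained = False             # give up waiting, but still stop the boards
            break
        time.sleep(poll_s)
    stop_digitizers()                   # finish stopping the V1725s
    return drained


# Usage with stand-in callables: the buffers report empty on the third poll.
polls = {"n": 0}


def fake_buffers_empty():
    polls["n"] += 1
    return polls["n"] >= 3


print(stop_run(lambda: None, fake_buffers_empty, lambda: None, poll_s=0.0))
```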

In the long run we should do the start/stop commands with an HTTP POST rather than a command-line call to esper-tool.

e) With run start/stop in place I could start to compare the timestamps of the V1725s and confirm that they matched (ie, all V1725s got reset at the same time). Also, all the events are cleared from the buffers.

f) However, I found that the frontend program still consistently failed with this error when the trigger rate

was above the maximum sustainable:

Deferred transition. First call of wait_buffer_empty. Stopping run

[feov1725MTI00,ERROR] [v1725CONET2.cxx:685:ReadEvent,ERROR] Communication error: -2

[feov1725MTI00,ERROR] [feoV1725.cxx:654:link_thread,ERROR] Readout routine error on thread 0 (module 0)

[feov1725MTI00,ERROR] [feoV1725.cxx:655:link_thread,ERROR] Exiting thread 0 with error

Stopped chronobox run; status = 0

Segmentation fault

Next steps:

1) Fix the seg-fault for high rate running.

2) More detailed timestamp checking, with cm_msg(ERRORs)

3) Bryerton is now working on the event FIFO on chronobox. That will be next thing to integrate. |

|

21

|

11 Mar 2019 15:27 |

Thomas | Routine | Trigger | New chronobox firmware; run start/stop implemented |

> f) However, I found that the frontend program still consistently failed with this error when the trigger rate

> was above the maximum sustainable:

>

> Deferred transition. First call of wait_buffer_empty. Stopping run

> [feov1725MTI00,ERROR] [v1725CONET2.cxx:685:ReadEvent,ERROR] Communication error: -2

> [feov1725MTI00,ERROR] [feoV1725.cxx:654:link_thread,ERROR] Readout routine error on thread 0 (module 0)

> [feov1725MTI00,ERROR] [feoV1725.cxx:655:link_thread,ERROR] Exiting thread 0 with error

> Stopped chronobox run; status = 0

> Segmentation fault

I sort of 'fixed' this problem. There is some sort of interference between the V1725 readout thread and the system call to esper-tool to stop the run. Some collision over system resources between the two causes the readout thread's ReadEvent call to fail. I 'fixed' the problem by adding, in the end_of_run part, a 500us pause of the readout threads before I make the system call to esper-tool.

Odd. In principle I think that the system calls and the readout threads are running on different cores, so it is not clear what the problem was. I should work out a better diagnosis and fix the problem properly. |

|

25

|

03 Apr 2019 15:11 |

Thomas | Routine | Software | CERN SSO proxy for ds-proto-daq |

Pierre and I got the CERN proxy set up for the Darkside prototype.

Using your CERN single-sign-on identity, you should be able to login to this page

https://m-darkside.web.cern.ch/

and see our normal MIDAS webpage.

The CERN server is proxying port 80 on ds-proto-daq. You can also see all the other services through the same page:

elog:

https://m-darkside.web.cern.ch/elog/DS+Prototype/

chronobox:

https://m-darkside.web.cern.ch/chronobox/

js-root:

https://m-darkside.web.cern.ch/rootana/

_________________________________

Technical details

1) We followed these instructions for creating a SSO-proxy:

https://cern.service-now.com/service-portal/article.do?n=KB0005442

We pointed the proxy to port 80 on ds-proto-daq

2) On ds-proto-daq, we needed to poke a hole through the firewall for port 80:

firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="188.184.28.139/32" port protocol="tcp" port="80" accept'

firewall-cmd --reload

[root@ds-proto-daq ~]# firewall-cmd --list-all

public (active)

...

rule family="ipv4" source address="188.184.28.139/32" port port="80" protocol="tcp" accept

This firewall rule is pointing to some particular IP that seems to be the proxy side of the server:

[root@ds-proto-daq ~]# host 188.184.28.139

139.28.184.188.in-addr.arpa domain name pointer oostandardprod-7b34bdf1f3.cern.ch.

It is not clear whether this particular IP will be stable in the long term.

3) We needed to modify mhttpd so it would serve content to hosts other than localhost. So changed mhttpd

command from

mhttpd -a localhost -D

to

mhttpd -D |

|

26

|

03 Apr 2019 15:31 |

Thomas | Routine | Software | test of elog |

The last elog didn't go out cleanly. Modified the elogd.cfg to point to the proxy. Try again. |

|

27

|

08 Apr 2019 08:31 |

Thomas | Routine | General | General work - day 1 at CERN |

Notes on day:

1) Fixed the problem with the network interfaces. The computer now boots with the correct network configuration: outside world visible and private network on.

2) The fan tray on the VME crate seemed to be broken. Got another VME crate from the pool and installed it. This VME crate seems to be working well.

3) Recommissioned the DAQ setup. Found a couple of small bugs related to the V1725 self-trigger logic. Fixed those, and the V1725 self-triggers seem to be working correctly.

4) Tried to install the new CDM from TRIUMF (with ssh access), but the clocks didn't stay synchronized. Will bring the module back to TRIUMF.

5) Added some code to the V1725 frontend for clearing extra events out of the ZMQ buffers at the end of the run. This protects against the case where the chronobox is triggering too fast for the V1725s. |

|

28

|

10 Apr 2019 05:40 |

Thomas | Routine | General | General work - day 3 at CERN |

Several points:

1) Gave a series of tutorials on the DAQ to DS people yesterday and today. Got a bunch of feedback, which I will pass on when I'm back at TRIUMF.

2) The computer ds-proto-daq was offline when I got in to the lab this morning. Hmm, not clear what is wrong with the computer. It didn't happen the first day. Maybe another power blip? Maybe we need a UPS for this DAQ machine, to protect it from power blips.

3) Using instructions from Luke, reconfigured the CDM to use the clock from the chronobox.

4) Added scripts for putting the chronobox and the CDM into a sensible state. Scripts are

/home/dsproto/online/dsproto_daq/setup_chronobox.sh

/home/dsproto/online/dsproto_daq/setup_cdm.sh

The scripts need to be rerun whenever the chronobox or VME crate are power cycled.

5) Fixed some bugs and added some new plots to online monitoring. In particular, added a bunch of plots related to the chronobox data.

6) Found some problems with monitoring of chronobox trigger primitives, which I passed on to Bryerton. |

|

29

|

11 Apr 2019 08:20 |

Thomas | Routine | Trigger | Missing ZMQ banks |

I have done a couple of longer tests of the DAQ setup. The runs were done with a high trigger rate of ~60Hz, with the V1725s asserting their busy to throttle the trigger.

I found that after a couple of hours (~5 hours) we would no longer be getting ZMQ packets from the chronobox.

You can see this from error messages like

15:29:04.092 2019/04/11 [feov1725MTI00,ERROR] [feoV1725.cxx:1033:read_trigger_event,ERROR] Error: did not

receive a ZMQ bank. Stopping run.

10:26:37.158 2019/04/11 [mhttpd,INFO] Run #657 started

If I start a new run I am still missing ZMQ packets. However, if I restart the frontend program (and hence

re-initialize the ZMQ link), then the chronobox does start sending triggers again. So it may be more of a

problem with the ZMQ setup in the MIDAS frontend. Needs more investigation. |

|

30

|

02 Jul 2019 18:01 |

Thomas | Routine | Software | MIDAS running again |

Darkside people seem to be doing some tests at CERN next week. It looks like they aren't going to use our DAQ (I think they will just use CAEN tools). But we took the opportunity to make the TRIUMF DAQ work again. There were a couple of issues:

1) I needed to invert the busy signal for input 0 to the chronobox in order to get any triggers out of the system. Not sure why; I don't think I had to do that before. But it is working now.

2) The CERN web proxy was not initially working. Somehow I 'fixed' it by changing the proxy configuration variable SERVICE_NAME from 'midas' to 'dummy'. I don't understand why that fixed it. But now you can see the DAQ here:

https://m-darkside.web.cern.ch/chronobox/ |