| ID | Date | Author | Type | Category | Subject |

| 21 | 11 Mar 2019 15:27 | Thomas | Routine | Trigger | New chronobox firmware; run start/stop implemented |

> f) However, I found that the frontend program still consistently failed with this error when the trigger rate was above the maximum sustainable:

>

> Deferred transition. First call of wait_buffer_empty. Stopping run

> [feov1725MTI00,ERROR] [v1725CONET2.cxx:685:ReadEvent,ERROR] Communication error: -2

> [feov1725MTI00,ERROR] [feoV1725.cxx:654:link_thread,ERROR] Readout routine error on thread 0 (module 0)

> [feov1725MTI00,ERROR] [feoV1725.cxx:655:link_thread,ERROR] Exiting thread 0 with error

> Stopped chronobox run; status = 0

> Segmentation fault

I have sort of 'fixed' this problem. There is some kind of conflict between the V1725 readout thread and the system call to esper-tool that stops the run: a collision over system resources between these two calls seems to make the readout thread's ReadEvent call fail. I 'fixed' the problem by adding, in the end_of_run routine, a 500 µs pause of the readout threads before making the system call to esper-tool.

This is odd: in principle, the system calls and the readout threads run on different cores, so it is not clear what the problem was. I should find a better diagnosis and fix the problem properly. |
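Below is a minimal Python sketch of the workaround, for illustration only. The real frontend is C++ (feoV1725.cxx), and ReadoutThread, read_lock and end_of_run are hypothetical names. The sketch assumes that each readout thread holds its own lock around every (simulated) ReadEvent() call. Under that assumption, end_of_run can take all the locks, wait 500 µs, and run the stop command while no read is in flight. stop_command stands in for the esper-tool call, whose arguments are not given here. The sketch reproduces the workaround only and does not diagnose the underlying conflict.

```python
import contextlib
import threading
import time


class ReadoutThread(threading.Thread):
    """Hypothetical stand-in for one V1725 link_thread readout loop."""

    def __init__(self):
        super().__init__(daemon=True)
        self.read_lock = threading.Lock()        # held for the duration of each read
        self.stop_requested = threading.Event()
        self.reads = 0                           # count of simulated ReadEvent() calls

    def run(self):
        while not self.stop_requested.is_set():
            with self.read_lock:
                self.reads += 1                  # placeholder for the real ReadEvent()
            time.sleep(1e-4)                     # lock is released between reads


def end_of_run(threads, stop_command, settle_s=500e-6):
    """Block all readout threads, wait settle_s, then run stop_command."""
    with contextlib.ExitStack() as stack:
        for t in threads:
            # Waits for any in-flight read on this thread to finish, then blocks new ones.
            stack.enter_context(t.read_lock)
        time.sleep(settle_s)                     # the 500 us pause from the log entry
        return stop_command()                    # stand-in for the esper-tool system call
```

Taking the locks guarantees that no read is in flight when the command runs, which the sleep alone does not. The 500 µs settle time is kept only because it is what made the failure go away in practice.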

| 22 | 27 Mar 2019 08:57 | Pierre | Configuration | Hardware | CERN setup |

Found that the trigger out from the CB (chronobox) is on output1.

Trigger / Not used

Clock / Not used

Set the frontend to use NIM in/out instead of TTL, as there is a convenient CAEN NIM-TTL-NIM adapter module available. |

| 23 | 27 Mar 2019 14:04 | Pierre | Configuration | Trigger | Time stamp sync |

The latest ChronoBox firmware is loaded. Let me know if this is what the chronobox should look like in terms of registers.

Are we monitoring the PLL lock loss (ODB: /DEAP Alarm?)

Here is a dump of the first 5 sync triggers, with no physics triggers:

1 0x830b577 0x17d7855 0x6b33d22 0x6b33d22 8.992791

2 0x830b577 0x17d7855 0x6b33d22 0x6b33d22 8.992791

3 0x830b577 0x17d7855 0x6b33d22 0x6b33d22 8.992791

4 0x830b577 0x17d7855 0x6b33d22 0x6b33d22 8.992791

1 0x830b581 0x2faf097 0x535c4ea 0x535c4ea 6.992792

2 0x830b581 0x2faf097 0x535c4ea 0x535c4ea 6.992792

3 0x830b581 0x2faf095 0x535c4ec 0x535c4ec 6.992792

4 0x830b581 0x2faf095 0x535c4ec 0x535c4ec 6.992792

1 0x830b587 0x47868d7 0x3b84cb0 0x3b84cb0 4.992792

2 0x830b587 0x47868d7 0x3b84cb0 0x3b84cb0 4.992792

3 0x830b587 0x47868d7 0x3b84cb0 0x3b84cb0 4.992792

4 0x830b587 0x47868d7 0x3b84cb0 0x3b84cb0 4.992792

1 0x830b5a1 0x5f5e119 0x23ad488 0x23ad488 2.992794

2 0x830b5a1 0x5f5e119 0x23ad488 0x23ad488 2.992794

3 0x830b5a1 0x5f5e117 0x23ad48a 0x23ad48a 2.992794

4 0x830b5a1 0x5f5e117 0x23ad48a 0x23ad48a 2.992794

1 0x830b5ab 0x7735959 0xbd5c52 0xbd5c52 0.992795

2 0x830b5ab 0x7735959 0xbd5c52 0xbd5c52 0.992795

3 0x830b5ab 0x7735959 0xbd5c52 0xbd5c52 0.992795

4 0x830b5ab 0x7735959 0xbd5c52 0xbd5c52 0.992795

With the physics triggers:

1 0x830b5c1 0x1834497f 0xeffc6c42 0x100393be 21.493591

2 0x830b5c1 0x1834497f 0xeffc6c42 0x100393be 21.493591

3 0x830b5c1 0x1834497f 0xeffc6c42 0x100393be 21.493591

4 0x830b5c1 0x1834497f 0xeffc6c42 0x100393be 21.493591

1 0x830b5d3 0x1834abb5 0xeffc0a1e 0x1003f5e2 21.495601

2 0x830b5d3 0x1834abb5 0xeffc0a1e 0x1003f5e2 21.495601

3 0x830b5d3 0x1834abb5 0xeffc0a1e 0x1003f5e2 21.495601

4 0x830b5d3 0x1834abb5 0xeffc0a1e 0x1003f5e2 21.495601

1 0x830b5dd 0x18350d7f 0xeffba85e 0x100457a2 21.497603

2 0x830b5dd 0x18350d7f 0xeffba85e 0x100457a2 21.497603

3 0x830b5dd 0x18350d7f 0xeffba85e 0x100457a2 21.497603

4 0x830b5dd 0x18350d7f 0xeffba85e 0x100457a2 21.497603

1 0x830b5e3 0x183585e7 0xeffb2ffc 0x1004d004 21.500068

2 0x830b5e3 0x183585e7 0xeffb2ffc 0x1004d004 21.500068

3 0x830b5e3 0x183585e7 0xeffb2ffc 0x1004d004 21.500068

4 0x830b5e3 0x183585e7 0xeffb2ffc 0x1004d004 21.500068

1 0x830b5ed 0x1a10bb9b 0xee1ffa52 0x11e005ae 23.991535

2 0x830b5ed 0x1a10bb9b 0xee1ffa52 0x11e005ae 23.991535

3 0x830b5ed 0x1a10bb9b 0xee1ffa52 0x11e005ae 23.991535

4 0x830b5ed 0x1a10bb9b 0xee1ffa52 0x11e005ae 23.991535

1 0x830b5fb 0x1a111f65 0xee1f9696 0x11e0696a 23.993578

2 0x830b5fb 0x1a111f65 0xee1f9696 0x11e0696a 23.993578

3 0x830b5fb 0x1a111f65 0xee1f9696 0x11e0696a 23.993578

4 0x830b5fb 0x1a111f65 0xee1f9696 0x11e0696a 23.993578

1 0x830b607 0x1a118115 0xee1f34f2 0x11e0cb0e 23.995577

2 0x830b607 0x1a118115 0xee1f34f2 0x11e0cb0e 23.995577

3 0x830b607 0x1a118115 0xee1f34f2 0x11e0cb0e 23.995577

4 0x830b607 0x1a118115 0xee1f34f2 0x11e0cb0e 23.995577

1 0x830b611 0x1a11e2c7 0xee1ed34a 0x11e12cb6 23.997577

2 0x830b611 0x1a11e2c7 0xee1ed34a 0x11e12cb6 23.997577

3 0x830b611 0x1a11e2c7 0xee1ed34a 0x11e12cb6 23.997577

4 0x830b611 0x1a11e2c7 0xee1ed34a 0x11e12cb6 23.997577
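The columns above can be cross-checked with a little arithmetic. The checks hold for the lines tested below. Column 4 equals (column 2 - column 3) modulo 2^32. Column 5 is the smaller of the two wrapped differences, i.e. the absolute difference. The last column is column 5 divided by 12.5 MHz. The column meanings and the 12.5 MHz clock are inferred from the numbers, not taken from the chronobox documentation. 12.5 MHz is the rate that reproduces the printed seconds. It also makes the step of roughly 25,000,000 counts in column 3 between consecutive sync triggers match the 2 s drop in the last column. A Python sketch of the check:

```python
MASK32 = 0xFFFFFFFF
CLOCK_HZ = 12.5e6  # assumption: inferred from the dump, not from chronobox documentation


def check_sync_line(line):
    """Recompute columns 4-6 of one dump line from columns 2 and 3."""
    fields = line.split()
    ts_a, ts_b, printed_fwd, printed_abs = (int(f, 16) for f in fields[1:5])
    fwd = (ts_a - ts_b) & MASK32      # wrapped difference, column 2 minus column 3
    back = (ts_b - ts_a) & MASK32     # wrapped difference the other way round
    abs_ticks = min(fwd, back)        # absolute difference in clock ticks
    return {
        "fwd_ok": fwd == printed_fwd,
        "abs_ok": abs_ticks == printed_abs,
        "seconds": abs_ticks / CLOCK_HZ,
        "printed_seconds": float(fields[5]),
    }


for line in ("1 0x830b577 0x17d7855 0x6b33d22 0x6b33d22 8.992791",
             "1 0x830b5c1 0x1834497f 0xeffc6c42 0x100393be 21.493591"):
    r = check_sync_line(line)
    print(r["fwd_ok"], r["abs_ok"], round(r["seconds"], 6), r["printed_seconds"])
```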

The ZMQ0 banks:

#banks:5 Bank list:-ZMQ0W200W201W202W203-

Bank:ZMQ0 Length: 40(I*1)/10(I*4)/10(Type) Type:Unsigned Integer*4

1-> 0x000a5f1c 0x000000c4 0x00000001 0x0ebd5273 0x00000001 0x00010001 0xffffffff 0x00000000

9-> 0xffff0000 0x00000000

------------------------ Event# 10 ------------------------

#banks:5 Bank list:-ZMQ0W200W201W202W203-

Bank:ZMQ0 Length: 40(I*1)/10(I*4)/10(Type) Type:Unsigned Integer*4

1-> 0x000a5f1d 0x000000c5 0x00000001 0x0ebd5279 0x00000001 0x00010001 0xffffffff 0x00000000

9-> 0xffff0000 0x00000000

|

| 24 | 28 Mar 2019 02:18 | Pierre | Configuration | Trigger | Test |

|

| 25 | 03 Apr 2019 15:11 | Thomas | Routine | Software | CERN SSO proxy for ds-proto-daq |

Pierre and I got the CERN proxy set up for the Darkside prototype.

Using your CERN single-sign-on identity, you should be able to log in to this page

https://m-darkside.web.cern.ch/

and see our normal MIDAS webpage.

The CERN server proxies port 80 on ds-proto-daq. You can also see all the other services through the same site:

elog:

https://m-darkside.web.cern.ch/elog/DS+Prototype/

chronobox:

https://m-darkside.web.cern.ch/chronobox/

js-root:

https://m-darkside.web.cern.ch/rootana/

_________________________________

Technical details

1) We followed these instructions for creating an SSO proxy:

https://cern.service-now.com/service-portal/article.do?n=KB0005442

We pointed the proxy to port 80 on ds-proto-daq.

2) On ds-proto-daq, we needed to poke a hole through the firewall for port 80:

firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="188.184.28.139/32" port protocol="tcp" port="80" accept'

firewall-cmd --reload

[root@ds-proto-daq ~]# firewall-cmd --list-all

public (active)

...

rule family="ipv4" source address="188.184.28.139/32" port port="80" protocol="tcp" accept

This firewall rule admits one particular IP address, which seems to be the proxy side of the CERN server:

[root@ds-proto-daq ~]# host 188.184.28.139

139.28.184.188.in-addr.arpa domain name pointer oostandardprod-7b34bdf1f3.cern.ch.

It is not clear whether this particular IP will be stable in the long term.

3) We needed to modify mhttpd so that it would serve content to hosts other than localhost, so we changed the mhttpd command from

mhttpd -a localhost -D

to

mhttpd -D |
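The IP-stability concern in item 2 follows from the /32 mask. The rich rule admits exactly one source address, so if the CERN proxy ever connects from a different IP, its requests to port 80 will simply be dropped. Below is a small Python illustration using the standard ipaddress module. It models only this one rule, not the rest of the firewalld zone. The address 188.184.28.140 is an arbitrary neighbouring address chosen for illustration.

```python
import ipaddress

# Source network from the rich rule in item 2 above.
ALLOWED_SOURCE = ipaddress.ip_network("188.184.28.139/32")


def rule_matches(source_ip):
    """True if a TCP/80 connection from source_ip would match this rich rule."""
    return ipaddress.ip_address(source_ip) in ALLOWED_SOURCE


print(ALLOWED_SOURCE.num_addresses)     # a /32 covers a single address
print(rule_matches("188.184.28.139"))   # the current proxy address
print(rule_matches("188.184.28.140"))   # any other address does not match
```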

| 26 | 03 Apr 2019 15:31 | Thomas | Routine | Software | test of elog |

The last elog entry didn't go out cleanly. Modified elogd.cfg to point to the proxy. Trying again. |

| 27 | 08 Apr 2019 08:31 | Thomas | Routine | General | General work - day 1 at CERN |

Notes on day:

1) Fixed the problem with the network interfaces. Now the computer boots with the correct network configuration: the outside world is visible and the private network is up.

2) The fan tray on the VME crate seemed to be broken. Got another VME crate from the pool and installed it; this crate seems to be working well.

3) Recommissioned the DAQ setup. Found a couple of small bugs in the V1725 self-trigger logic. Fixed those, and the V1725 self-triggers now seem to be working correctly.

4) Tried to install the new CDM from TRIUMF (with ssh access), but the clocks didn't stay synchronized. Will bring the module back to TRIUMF.

5) Added some code to the V1725 frontend to clear extra events out of the ZMQ buffers at the end of the run. This protects against the case where the chronobox triggers faster than the V1725s can keep up. |
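The end-of-run cleanup in item 5 amounts to a non-blocking drain: keep pulling buffered events until none are left, and discard them. The entry does not show the frontend code. This is a minimal Python sketch in which a queue.Queue stands in for the ZMQ socket, and drain_extra_events is a hypothetical name. With pyzmq, the equivalent loop would call sock.recv(zmq.NOBLOCK) and stop on the zmq.Again exception.

```python
import queue


def drain_extra_events(events):
    """Discard every event still buffered in `events`; return how many were dropped.

    `events` is a queue.Queue standing in for the chronobox ZMQ socket.
    """
    dropped = 0
    while True:
        try:
            events.get_nowait()   # non-blocking; raises queue.Empty once nothing is left
        except queue.Empty:
            return dropped
        dropped += 1


# Usage: three trigger packets left over after the V1725s have stopped reading.
leftover = queue.Queue()
for packet in (b"trig1", b"trig2", b"trig3"):
    leftover.put(packet)
print(drain_extra_events(leftover))
```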

| 28 | 10 Apr 2019 05:40 | Thomas | Routine | General | General work - day 3 at CERN |

Several points:

1) Gave a series of tutorials on the DAQ to DS people yesterday and today. Got a lot of feedback, which I will pass on when I'm back at TRIUMF.

2) The computer ds-proto-daq was offline when I got into the lab this morning. It is not clear what is wrong with the computer; this didn't happen on the first day. Maybe another power blip? We may need a UPS for this DAQ machine, to protect it from power blips.

3) Using instructions from Luke, reconfigured the CDM to use the clock from the chronobox.

4) Added scripts for putting the chronobox and the CDM into a sensible state. The scripts are:

/home/dsproto/online/dsproto_daq/setup_chronobox.sh

/home/dsproto/online/dsproto_daq/setup_cdm.sh

The scripts need to be rerun whenever the chronobox or the VME crate is power cycled.

5) Fixed some bugs and added some new plots to the online monitoring. In particular, added a number of plots related to the chronobox data.

6) Found some problems with the monitoring of chronobox trigger primitives, which I passed on to Bryerton. |

| 29 | 11 Apr 2019 08:20 | Thomas | Routine | Trigger | Missing ZMQ banks |

I have done a couple of longer tests of the DAQ setup. The runs were done with a high trigger rate of ~60 Hz, with the V1725s asserting their busy signals to throttle the trigger.

I found that after a few hours (~5 hours) we would no longer get ZMQ packets from the chronobox. You can see this in error messages like:

15:29:04.092 2019/04/11 [feov1725MTI00,ERROR] [feoV1725.cxx:1033:read_trigger_event,ERROR] Error: did not receive a ZMQ bank. Stopping run.

10:26:37.158 2019/04/11 [mhttpd,INFO] Run #657 started

If I start a new run, ZMQ packets are still missing. However, if I restart the frontend program (and hence re-initialize the ZMQ link), the chronobox does start sending triggers again. So the problem may lie more with the ZMQ setup in the MIDAS frontend. Needs more investigation. |
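Restarting the frontend may help because it rebuilds the ZMQ link. If so, that could be tested within a run. On a missing bank, re-initialize the link once, and stop the run only if the retry also times out. This is a hypothetical Python sketch, not what feoV1725.cxx does today (it stops the run immediately). receive() and reinit_link() are placeholder callables for the frontend's ZMQ receive-with-timeout and its link setup.

```python
def get_trigger_bank(receive, reinit_link, max_reinits=1):
    """Receive one trigger bank, re-initializing the link after a timeout.

    receive() returns a bank, or None on timeout; reinit_link() rebuilds the
    ZMQ connection. Both are hypothetical placeholders. Returns (bank, reinits);
    bank is None if every attempt timed out, in which case the caller would
    stop the run as the frontend does now.
    """
    reinits = 0
    while True:
        bank = receive()
        if bank is not None or reinits >= max_reinits:
            return bank, reinits
        reinit_link()        # tear down and rebuild the link, then try again
        reinits += 1


# Usage with a fake link that only recovers after one re-initialization.
link = {"alive": False}
bank, n = get_trigger_bank(lambda: b"ZMQ0" if link["alive"] else None,
                           lambda: link.update(alive=True))
print(bank, n)
```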

| 30 | 02 Jul 2019 18:01 | Thomas | Routine | Software | MIDAS running again |

Darkside people seem to be doing some tests at CERN next week. It looks like they aren't going to use our DAQ (I think they will just use the CAEN tools), but we took the opportunity to get the TRIUMF DAQ working again. There were a couple of issues:

1) I needed to invert the busy signal for input 0 of the chronobox in order to get any triggers out of the system. Not sure why; I don't think I had to do that before. But it is working now.

2) The CERN web proxy was initially not working. Somehow I 'fixed' it by changing the proxy configuration variable SERVICE_NAME from 'midas' to 'dummy'. I don't understand why that fixed it, but you can now see the DAQ here:

https://m-darkside.web.cern.ch/chronobox/ |

| 31 | 11 Jul 2019 15:52 | Thomas | Routine | Trigger | invert first chronobox busy signal |

The DAQ seems to be ready for tests with proto-0 tomorrow.

I had to invert the first busy input in order to get the chronobox to produce triggers. I modified the setup script

setup_chronobox.sh

so that this is now the default. Not sure why it is necessary.

Another interesting fact: it seems the chronobox only requests a DHCP address when it first boots. I think the chronobox and ds-proto-daq were rebooted at the same time, so the DHCP server on ds-proto-daq was probably not yet running when the chronobox asked for an IP. The chronobox got an IP fine when it was power cycled. |

| 32 | 12 Jul 2019 01:27 | Marco Rescigno | Configuration | | MB1 test in proto-0 setup/1 |

Changed custom size to 500 (20 µs); tested OK in run 681. |
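The numbers in this entry imply 40 ns per custom-size unit (20 µs / 500). The V1725 samples at 250 MS/s (4 ns per sample), so that is 10 samples per unit. The 10-samples-per-unit figure is inferred from this entry rather than taken from the CAEN manual. Under that assumption, the 16 µs window used for run 747 would correspond to a custom size of 400. A small Python conversion sketch, with hypothetical function names:

```python
SAMPLE_NS = 4.0        # V1725 samples at 250 MS/s, i.e. 4 ns per sample
SAMPLES_PER_UNIT = 10  # assumption: inferred from "500 (20 us)", not from the CAEN manual


def window_us(custom_size):
    """Acquisition window length in microseconds for a given custom size."""
    return custom_size * SAMPLES_PER_UNIT * SAMPLE_NS / 1000.0


def custom_size_for(window_us_wanted):
    """Custom size that gives (approximately) the requested window in microseconds."""
    return round(window_us_wanted * 1000.0 / (SAMPLES_PER_UNIT * SAMPLE_NS))


print(window_us(500))        # this entry's setting
print(custom_size_for(16))   # the 16 us window used for run 747
```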

| 33 | 12 Jul 2019 02:05 | Marco Rescigno | Problem | Trigger | MB1 test in proto-0 setup/1 |

Tried to feed the laser sync signal into the chronobox (clkin1 input); apparently all triggers were then dropped by the chronobox.

The problem is that the same now also happens with the regular setup, where the clkin1 signal is taken by the dual timer.

|

| 34 | 12 Jul 2019 06:01 | Marco Rescigno | Routine | General | Run 703 |

Run 703 is being written to disk.

16 channels of board 00.

Laser trigger at 10 Hz

The run ended with:

[feoV1725.cxx:1033:read_trigger_event,ERROR] Error: did not receive a ZMQ bank. Stopping run. |

| 35 | 12 Jul 2019 07:58 | Marco Rescigno | Routine | General | MB1 test in proto-0 setup/day 2 |

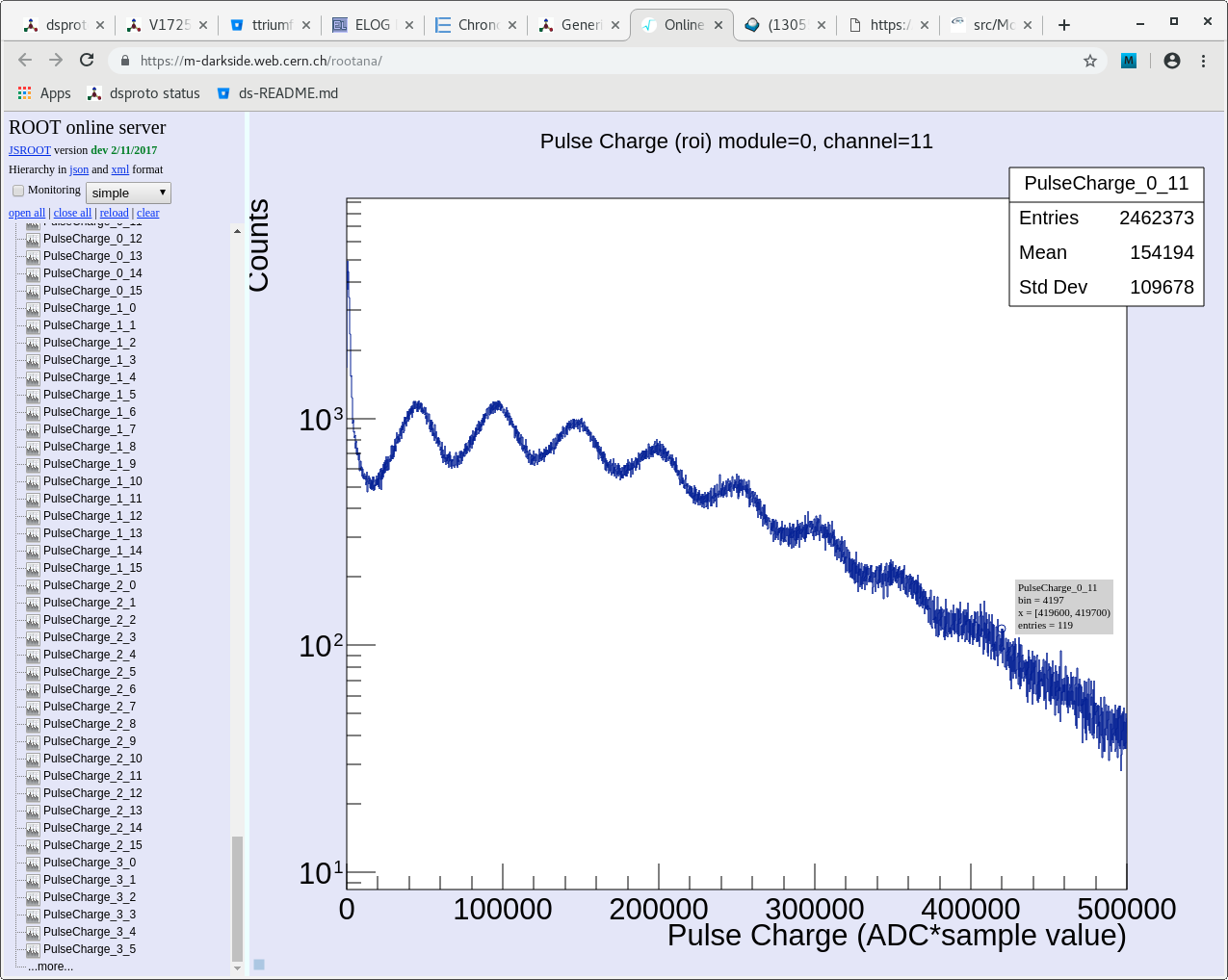

Implemented a simple charge integration in the MIDAS display; most of the channels look almost as good as this one.

Nothing changed on the DAQ side, apart from the DAC value being moved to 5000, allowing a slightly greater dynamic range. |
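A 'simple charge integration' usually means summing the baseline-subtracted samples over a fixed window. The entry does not describe the display code, so this Python sketch is an assumption throughout. The baseline is taken as the mean of the first n_baseline samples. The caller supplies the integration window [start, stop). Pulses are assumed to be negative-going by default; set negative_pulse=False otherwise. The waveform in the usage line is made up.

```python
def integrate_charge(samples, n_baseline, start, stop, negative_pulse=True):
    """Baseline-subtracted sum of samples[start:stop] (ADC counts x samples).

    The baseline is the mean of the first n_baseline samples, assumed pulse-free.
    negative_pulse=True flips the sign so that negative-going pulses give a
    positive charge (the polarity is an assumption).
    """
    pre = samples[:n_baseline]
    if not pre:
        raise ValueError("need at least one baseline sample")
    baseline = sum(pre) / len(pre)
    total = sum(s - baseline for s in samples[start:stop])
    return -total if negative_pulse else total


waveform = [1000] * 10 + [900, 800, 900] + [1000] * 5   # made-up example trace
print(integrate_charge(waveform, n_baseline=10, start=10, stop=13))
```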

| Attachment 1: Screenshot_from_2019-07-13_17-45-14.png |

|

36

|

12 Jul 2019 11:34 |

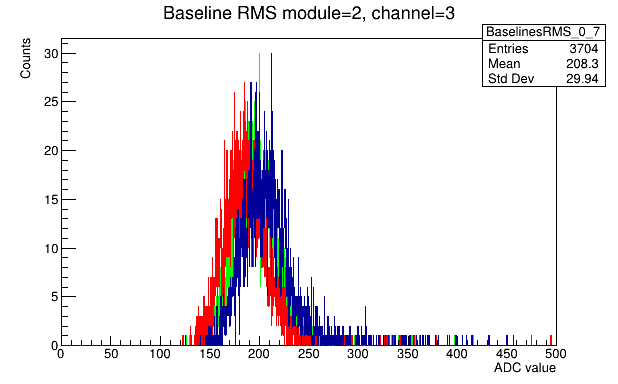

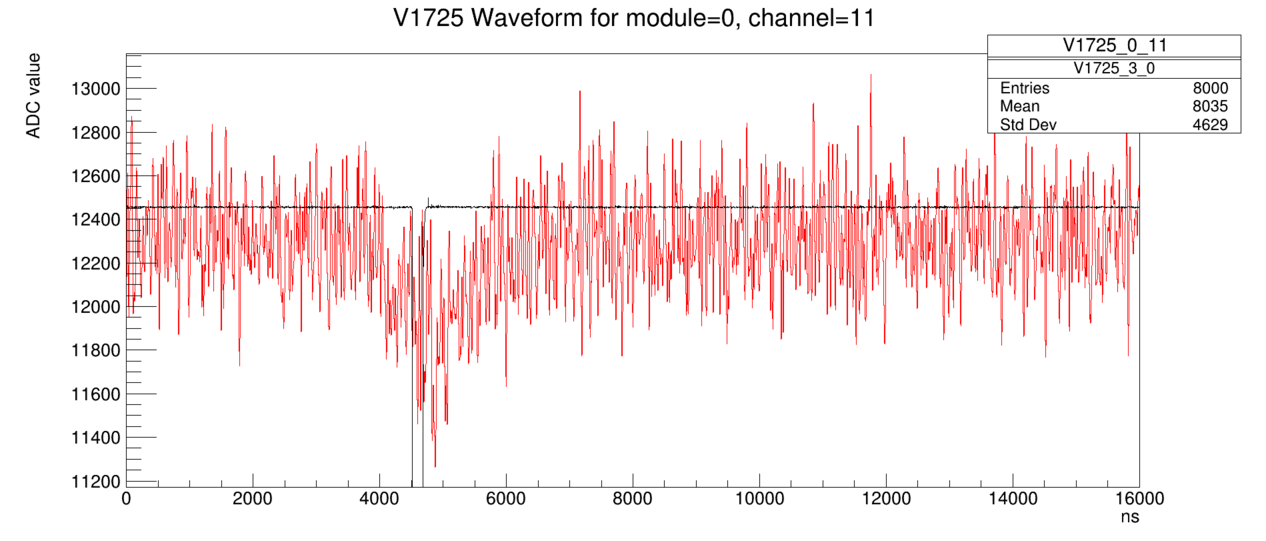

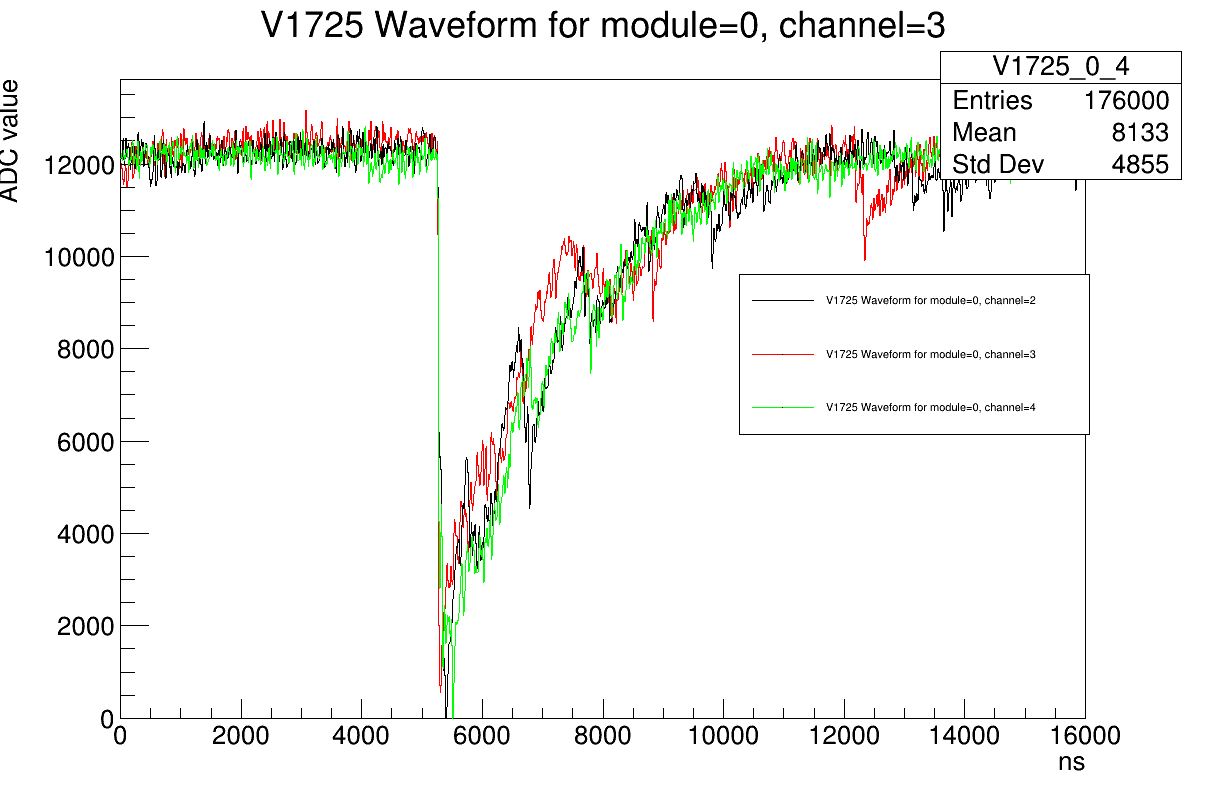

Marco Rescigno | Routine | General | MB1 test in proto-0 setup/2 Run 718 |

Run 718 has a 25-channel readout: Board 0 ch 0-15 and Board 0 ch 0-7.

Laser triggered; there is some noise, but maybe not as bad as it looks here.

The baseline RMS is 200 ADC counts. The single-PE peak is about 100-200 ADC counts from the baseline.

Looking carefully, some very nice scintillation events can be found.
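For comparison with the numbers above, the sketch below computes baseline mean and RMS in Python. It assumes that "RMS of baseline" means the standard deviation of the pre-pulse samples. With an RMS of 200 ADC counts and an SPE amplitude of 100-200 ADC counts, the SPE amplitude is only about 0.5 to 1 times the per-sample noise. baseline_stats is a hypothetical helper, not the monitoring code.

```python
import math


def baseline_stats(samples, n_baseline):
    """Mean and RMS (standard deviation about the mean) of the first n_baseline samples."""
    pre = samples[:n_baseline]
    if not pre:
        raise ValueError("need at least one baseline sample")
    mean = sum(pre) / len(pre)
    rms = math.sqrt(sum((s - mean) ** 2 for s in pre) / len(pre))
    return mean, rms


# SPE amplitude relative to the baseline RMS, using the numbers quoted for run 718.
for spe_adc in (100, 200):
    print(spe_adc, "ADC counts ->", spe_adc / 200, "x baseline RMS")
```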

|

| Attachment 1: RMS_run718.gif |

| Attachment 2: SPE.gif |

| Attachment 3: Scintillation.gif |

| 37 | 13 Jul 2019 14:50 | Marco Rescigno | Routine | General | Long Laser run on disk |

Run 747, 250k events.

As requested by Alessandro: 40% post-trigger, acquisition window 16 µs.
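Assume "40% post-trigger" follows the usual CAEN convention, where the setting gives the share of the acquisition window that falls after the trigger. Then the 16 µs window splits into 9.6 µs before the trigger and 6.4 µs after it. A one-function Python sketch; trigger_split_us is a hypothetical name:

```python
def trigger_split_us(window_us, post_trigger_fraction):
    """Return (pre_trigger_us, post_trigger_us) for an acquisition window.

    Assumes post_trigger_fraction is the share of the window after the trigger.
    """
    if not 0.0 <= post_trigger_fraction <= 1.0:
        raise ValueError("post_trigger_fraction must be between 0 and 1")
    post = window_us * post_trigger_fraction
    return window_us - post, post


pre, post = trigger_split_us(16.0, 0.40)
print(f"pre-trigger {pre:.1f} us, post-trigger {post:.1f} us")
```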

|

| 38 | 16 Jul 2019 01:58 | Marco Rescigno | Routine | General | Run 766 (Laser) |

Laser run with Vbias = 65 V, the new recommended value from the PE group.

500k events acquired. |

| 39 | 16 Jul 2019 02:39 | Marco Rescigno | Routine | General | run 771, scintillation events triggering on ch11 and ch12 of board0 |

Trigger set up with a threshold of 1500 ADC counts with respect to the baseline, on just two channels.

This also serves to limit the trigger rate to 50 Hz, since the busy still does not work.

100k events on disk. |
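The V1725 self-trigger is evaluated in the digitizer firmware; the Python sketch below only models the condition described in this entry. It assumes that the two channels are combined with a logical OR and that pulses are negative-going. The entry states neither. channel_fires and event_triggers are hypothetical names, and the baseline values in the usage lines are made up.

```python
def channel_fires(samples, baseline, threshold=1500, negative_pulse=True):
    """True if any sample departs from the baseline by at least `threshold` ADC counts."""
    for s in samples:
        excursion = baseline - s if negative_pulse else s - baseline
        if excursion >= threshold:
            return True
    return False


def event_triggers(waveforms, baselines, channels=(11, 12), threshold=1500,
                   negative_pulse=True):
    """Logical OR of the per-channel threshold decisions (board 0, ch11 and ch12)."""
    return any(channel_fires(waveforms[ch], baselines[ch], threshold, negative_pulse)
               for ch in channels)


baselines = {11: 8000, 12: 8000}                       # made-up baseline values
event = {11: [8000, 7000, 8000], 12: [8000, 6400, 8000]}
print(event_triggers(event, baselines))                # ch12 dips 1600 counts
```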

| 40 | 16 Jul 2019 02:41 | Marco Rescigno | Problem | Trigger | Busy handling |

This morning I tried to take some data with Vbias = 65 V, which seems better for the SiPMs in MB1.

Busy handling is still erratic.

At the beginning it seemed to work fine with busy_invert off and busy_enable on.

After a while (maybe after the first busy signal?), the only workaround seemed to be to disable busy_enable.
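One way to reason about the busy_invert/busy_enable behaviour is to model it as a gate. The gate blocks triggers whenever the (optionally inverted) busy input reads as asserted. Under that model, a busy input with the wrong polarity looks permanently busy and blocks every trigger. That would be consistent with the earlier need to invert the first busy input. Clearing busy_enable lets every trigger through, so the rate then has to be limited some other way, as with the two-channel threshold in run 771. This Python sketch is a hypothetical model, not the chronobox firmware. The flag names mirror those in this entry, and an idle-high input is an assumption.

```python
def trigger_allowed(busy_level, busy_invert, busy_enable):
    """Return True if the modelled busy gate lets a trigger through.

    busy_level is the raw logic level on the busy input (True = high).
    """
    busy = busy_level != busy_invert     # XOR: optional polarity inversion
    return not (busy_enable and busy)


# An idle input that sits high (assumed) reads as "busy" unless inverted.
for invert in (False, True):
    print("invert", invert, "-> idle-high input allows triggers:",
          trigger_allowed(True, invert, busy_enable=True))
```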